Location: CA


Challenges Entered

Play in a realistic insurance market, compete for profit!

Latest submissions (all graded): 127198, 127192, 127175


Insurance pricing game

Time of town hall

Almost 4 years ago

I was referring to this page: https://www.aicrowd.com/challenges/insurance-pricing-game/leaderboards?challenge_leaderboard_extra_id=662&challenge_round_id=625

Time of town hall

Almost 4 years ago

Hi, the invite says 5 PM GMT, but the final leaderboard page says 12 PM EST. Because North America switches to daylight saving time earlier than Europe, I think 5 PM GMT is actually 1 PM Eastern (EDT) on 2021-03-21.

Let me know.
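The offset argument can be checked with a short sketch. It assumes the 2021 rules (US daylight saving runs from the second Sunday of March to the first Sunday of November; UK summer time runs from the last Sunday of March to the last Sunday of October) and works at day granularity, ignoring the exact transition hour. The helper names are hypothetical.

```python
from datetime import date, timedelta


def nth_sunday(year: int, month: int, n: int) -> date:
    """Return the n-th Sunday of the given month."""
    first = date(year, month, 1)
    offset = (6 - first.weekday()) % 7  # weekday(): Monday=0 ... Sunday=6
    return first + timedelta(days=offset + 7 * (n - 1))


def last_sunday(year: int, month: int) -> date:
    """Return the last Sunday of the given month."""
    if month == 12:
        next_month = date(year + 1, 1, 1)
    else:
        next_month = date(year, month + 1, 1)
    last_day = next_month - timedelta(days=1)
    return last_day - timedelta(days=(last_day.weekday() - 6) % 7)


def eastern_utc_offset(day: date) -> int:
    """UTC offset of US Eastern time: -4 (EDT) during DST, else -5 (EST)."""
    dst_start = nth_sunday(day.year, 3, 2)
    dst_end = nth_sunday(day.year, 11, 1)
    return -4 if dst_start <= day < dst_end else -5


def london_utc_offset(day: date) -> int:
    """UTC offset of UK time: +1 (BST) during summer time, else 0 (GMT)."""
    bst_start = last_sunday(day.year, 3)
    bst_end = last_sunday(day.year, 10)
    return 1 if bst_start <= day < bst_end else 0


def london_hour_to_eastern(day: date, hour: int) -> int:
    """Convert a London wall-clock hour to the US Eastern wall-clock hour.

    Simplified: does not wrap results past midnight.
    """
    utc_hour = hour - london_utc_offset(day)
    return utc_hour + eastern_utc_offset(day)


print(london_hour_to_eastern(date(2021, 3, 21), 17))  # 13, i.e. 1 PM EDT
```

Between 2021-03-14 and 2021-03-28 the gap is only four hours instead of the usual five, which is why a fixed "12 PM EST" conversion would be off by one hour.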

It's (almost) over! sharing approaches

About 4 years ago

I agree. Otherwise, I'd like to have access to that data as well, haha.

It's (almost) over! sharing approaches

About 4 years ago

That's clever. I didn't think of that. I could have used the prior version of my model for the ~40K policies without history, and my new model (which includes prior claims) for the ~60K policies with history.

Instead, I bundled the ~40K new policies with the no-claim group (which is not so bad, because in year 1 everybody is assumed to have no prior claim).

Anyway, fingers crossed
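The two-model split discussed in this post can be sketched as a simple router. This is a minimal illustration, not the approach either participant actually submitted: the models are placeholder callables, and detecting "has history" via the presence of a `claim_count` key is an assumption.

```python
from typing import Any, Callable, Dict, List

Policy = Dict[str, Any]
Model = Callable[[Policy], float]


def predict_by_history(
    policies: List[Policy],
    has_history: Callable[[Policy], bool],
    model_with_history: Model,
    model_without_history: Model,
) -> List[float]:
    """Score each policy with the model that matches its data availability."""
    predictions = []
    for policy in policies:
        if has_history(policy):
            # Policy seen in earlier years: prior-claim features are meaningful.
            predictions.append(model_with_history(policy))
        else:
            # New policy: fall back to the model trained without claim history.
            predictions.append(model_without_history(policy))
    return predictions


# Hypothetical toy models, for illustration only.
policies = [{"id": 1, "claim_count": 2}, {"id": 2}]
preds = predict_by_history(
    policies,
    has_history=lambda p: "claim_count" in p,
    model_with_history=lambda p: 100.0 + 50.0 * p["claim_count"],
    model_without_history=lambda p: 120.0,
)
print(preds)  # [200.0, 120.0]
```

Routing this way avoids treating "no history available" as "no claims", which is what happens when new policies are bundled with the no-claim group.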

It's (almost) over! sharing approaches

About 4 years ago

That is smart; it can certainly reduce some of the instability in profit. I clearly didn't spend enough time on those analyses.

It's (almost) over! sharing approaches

About 4 years ago

What seems to have worked for me is binning and capping numerical variables, usually into 10 approximately equal buckets. I didn't want the model to overfit on a small portion of the data (for example, a split on top_speed 175 followed by a split on top_speed 177, which basically amounts to one-hot encoding a speed of 176).
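A minimal sketch of equal-count binning with capping, assuming cut points are learned from the training data and reused at prediction time. The function names are hypothetical; a production pipeline might use a library quantile routine instead.

```python
from bisect import bisect_right
from typing import List, Sequence


def quantile_edges(values: Sequence[float], n_bins: int = 10) -> List[float]:
    """Cut points that split `values` into roughly equal-count buckets.

    Duplicate cut points (from heavily tied values) are dropped, so fewer
    than n_bins buckets may result.
    """
    ordered = sorted(values)
    n = len(ordered)
    edges: List[float] = []
    for k in range(1, n_bins):
        edge = ordered[(k * n) // n_bins]
        if not edges or edge > edges[-1]:
            edges.append(edge)
    return edges


def bucket(x: float, edges: Sequence[float]) -> int:
    """Bucket index in [0, len(edges)].

    Values below the first edge or above the last one are capped into the
    first or last bucket, so unseen extremes cannot create new splits.
    """
    return bisect_right(edges, x)


# Hypothetical top_speed values from 150 to 249.
edges = quantile_edges(list(range(150, 250)), n_bins=10)
print([bucket(s, edges) for s in (175, 176, 177)])  # [2, 2, 2]
```

Because 175, 176 and 177 land in the same bucket, a tree model can no longer isolate the single value 176.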

I also created indicator variables for the 20 most popular cars. I wasn't sure how to do target encoding without overfitting on make_model values with little exposure.

I created an indicator variable for weight = 0. I'm not sure what those were, but they behaved differently.
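The indicator features described above can be sketched as follows. This assumes one `make_model` string and one numeric `weight` per policy; the generated column names are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Sequence


def top_n_categories(values: Sequence[str], n: int = 20) -> List[str]:
    """Most frequent categories (ties keep first-seen order)."""
    return [value for value, _ in Counter(values).most_common(n)]


def indicator_features(
    make_model: str, weight: float, top_models: Sequence[str]
) -> Dict[str, int]:
    """One 0/1 column per popular make_model, plus a weight == 0 flag.

    Rarer make_model values get all zeros instead of a noisy target encoding.
    """
    features = {f"make_model_{m}": int(make_model == m) for m in top_models}
    features["weight_is_zero"] = int(weight == 0)
    return features


# Hypothetical make_model codes; n=2 instead of 20 to keep the example small.
top = top_n_categories(["a", "b", "a", "c", "a", "b"], n=2)
print(top)  # ['a', 'b']
print(indicator_features("c", 0, top))
```

A rare model such as `"c"` simply gets zeros in every make_model column, so the model falls back on the other vehicle features for it.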

For the final week, at the cost of worsening my RMSE a bit on the public leaderboard, I included actual claim_count and yrs_since_last_claim features (in contrast to the claim discount, which is not affected by every claim). Fingers crossed that this will provide an edge. These features were quite predictive; however, they will only be available for ~60% of the final dataset. Also, the average prediction for policies with 0 claims (which would be the case for the ~40% that are new in the final dataset) did not decrease by much. The future will tell.
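A sketch of how such history features could be derived without leaking the current year's claim. It assumes one row per (`id_policy`, `year`) with a `claim_amount` column; these column names are assumptions, and this is not necessarily how the features above were built.

```python
from typing import Any, Dict, List, Optional, Tuple

Row = Dict[str, Any]


def add_claim_history(rows: List[Row]) -> List[Row]:
    """Add claim_count and yrs_since_last_claim from strictly earlier years.

    yrs_since_last_claim is None when the policy has no earlier claim.
    """
    history: Dict[Any, Tuple[int, Optional[int]]] = {}
    out: List[Row] = []
    for row in sorted(rows, key=lambda r: (r["id_policy"], r["year"])):
        count, last_year = history.get(row["id_policy"], (0, None))
        features = dict(row)
        features["claim_count"] = count
        features["yrs_since_last_claim"] = (
            None if last_year is None else row["year"] - last_year
        )
        out.append(features)
        # Update history only after emitting the row, so a claim in year t
        # is first visible in year t + 1.
        if row["claim_amount"] > 0:
            history[row["id_policy"]] = (count + 1, row["year"])
    return out


rows = [
    {"id_policy": "P1", "year": 1, "claim_amount": 0.0},
    {"id_policy": "P1", "year": 2, "claim_amount": 500.0},
    {"id_policy": "P1", "year": 3, "claim_amount": 0.0},
    {"id_policy": "P1", "year": 4, "claim_amount": 0.0},
]
out = add_claim_history(rows)
print([(r["year"], r["claim_count"], r["yrs_since_last_claim"]) for r in out])
# [(1, 0, None), (2, 0, None), (3, 1, 1), (4, 1, 2)]
```

A policy that is new in the final year ends up with `claim_count = 0`, which is exactly why new policies and genuine no-claim policies become indistinguishable.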

Since I was first on the week 10 leaderboard, I decided not to touch the pricing layer. I didn't want to jinx it.

Good luck everyone

About 4 years ago

I had a lot of fun with this competition! I learned a lot along the way. Thank you for everything.

And for those who haven’t finalized their submission yet, this is the final sprint!

Some final thoughts and feedback

About 4 years ago

I had the same idea. I manually found two cases, which I believe were the Chevrolet Aveo and the Nissan Micra. It seemed like it would take too much time, and I'm not sure where I would source a European vehicle database.

Some final thoughts and feedback

About 4 years ago

I was wondering whether this was allowed, given the requirement that the model can be retrained without workarounds.

Final data question

About 4 years ago

Hi,

Question on the composition of the final dataset.

It says it's around 100K policies in year 5, some from the training data and some new.

Looking at the numbers: training 60K, RMSE 5K, 10 weekly sets 30K.

I'm wondering whether the final data is year 5 of all of those policies. In that case, "new to you" would come from the fact that we never actually saw the data for the policies in the RMSE and 10 weekly sets.

If I understand correctly, pol_sit_duration would then be at least 5, because in the training data pol_sit_duration is never smaller than year?
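The arithmetic behind the question, plus a quick check of the hypothesised `pol_sit_duration >= year` rule, can be sketched as follows. The set sizes are the approximate figures quoted above, and the rows passed to the check are assumed to be dicts with `year` and `pol_sit_duration` keys.

```python
from typing import Dict, Iterable, List

# Approximate set sizes quoted above, in thousands of policies.
SET_SIZES = {"training": 60, "rmse": 5, "weekly": 30}


def share_without_training_history(sizes: Dict[str, int]) -> float:
    """Fraction of policies never seen in the training data."""
    total = sum(sizes.values())
    return (total - sizes["training"]) / total


def duration_violations(rows: Iterable[Dict[str, int]]) -> List[Dict[str, int]]:
    """Rows where pol_sit_duration < year, i.e. counterexamples to the
    hypothesised rule pol_sit_duration >= year."""
    return [r for r in rows if r["pol_sit_duration"] < r["year"]]


print(sum(SET_SIZES.values()))  # 95
print(round(share_without_training_history(SET_SIZES), 3))  # 0.368
```

The three sets add up to about 95K, close to the stated ~100K, and roughly 37% of those policies would be "new to you" under this reading. An empty result from `duration_violations` on the training rows would support the rule, though it would not prove it holds for the final dataset.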

Review of week 4

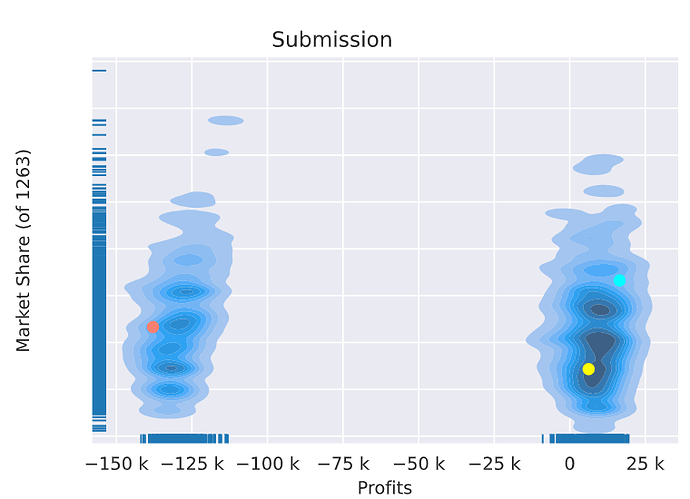

About 4 years ago

Here is an excerpt from the summary of my week 4 performance. It looks like a single $150K claim that I "won" in 25-30% of my markets explains the poor performance this week. At least that's what I presume, since there's a very big gap between losing and profitable markets. Did anybody else see something similar this week?
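One way to test the single-claim hypothesis is to split markets by whether they won that policy and compare mean profit. This sketch assumes per-market profits and a per-market "won the large-claim policy" flag can be read off the weekly feedback; the numbers below are invented for illustration.

```python
from statistics import mean
from typing import Sequence, Tuple


def split_by_large_claim(
    market_profits: Sequence[float], won_large_claim: Sequence[bool]
) -> Tuple[float, float]:
    """Mean profit of markets that won the large-claim policy vs. the rest.

    Raises StatisticsError if either group is empty.
    """
    won = [p for p, w in zip(market_profits, won_large_claim) if w]
    lost = [p for p, w in zip(market_profits, won_large_claim) if not w]
    return mean(won), mean(lost)


# Invented example: 2 of 5 markets won the policy with the large claim.
profits = [-120_000, -110_000, 30_000, 25_000, 35_000]
flags = [True, True, False, False, False]
won_mean, lost_mean = split_by_large_claim(profits, flags)
print(lost_mean - won_mean)  # 145000
```

If the gap between the two groups is close to the size of the claim itself, that single policy plausibly explains most of the week's spread.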

Submission taking too long to evaluate

About 4 years ago

Same thing here: submission 114248, submitted yesterday evening, is still stuck.

Description of weekly feedback

About 4 years ago

Hi, this will be valuable feedback, I think! However, I have a few questions:

- Am I understanding correctly that this model only wins policies that have a second driver (driver 2)?

- In point 2, "often" and "sometimes" have the same interval. Is this an error?

- Also, am I understanding correctly that, for this model, every policy was won in at least 1.1% of the markets?

- Finally, is this based on the second pass, in which the model is only compared against the best 10% of competitors?

RMSE training vs leaderboard

About 4 years ago

In my training set, the RMSE is a lot higher than on the leaderboard (out of sample). I'm wondering whether it's the same for others. Are there simply fewer extreme claims in the out-of-sample data?
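A small illustration of why RMSE is so sensitive to extreme claims: a single large claim can dominate the squared-error sum. The portfolio below is hypothetical (a flat prediction of 100 per policy).

```python
from math import sqrt
from typing import Sequence


def rmse(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Root mean squared error."""
    squared = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    return sqrt(squared / len(actual))


# Hypothetical portfolio: 99 policies without claims, flat prediction of 100.
without_extreme = rmse([0.0] * 99, [100.0] * 99)
# Same portfolio plus one policy with a 10,000 claim.
with_extreme = rmse([0.0] * 99 + [10_000.0], [100.0] * 100)
print(without_extreme)  # 100.0
print(round(with_extreme, 1))  # 995.0
```

One claim among 100 policies raises RMSE almost tenfold, so an out-of-sample set with fewer extreme claims can score much lower than the training set even if the model is equally good on both.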

Submission error: " [bt] (6) /usr"

About 4 years ago

OK, so I did more experimenting. It looks like I was able to submit successfully by doing three things:

- Completely reset the Colab notebook using Restart runtime under the Runtime menu.

- Use the trick above to install version 1.2.0.1 of XGBoost.

- Use save() and load() instead of xgb.save() and xgb.load().

Hope it helps!

Submission error: " [bt] (6) /usr"

About 4 years ago

Isn't it weird that it happened to 4-5 of us on the same day, and we're all using XGBoost?

Submission error: " [bt] (6) /usr"

About 4 years ago

Hi @jyotish,

It looks like this isn't the first time this error has popped up. Do you know if/how it was fixed last time?

Discussion from last time

Submission error: " [bt] (6) /usr"

About 4 years ago

I tried replacing install.packages("xgboost") with:

    packagexg <- "http://cran.r-project.org/src/contrib/Archive/xgboost/xgboost_1.2.0.1.tar.gz"
    install.packages(packagexg, repos = NULL, type = "source")

Without success. The submission still produced the same error.

Challenge with the given details could not be found

About 4 years ago

(topic withdrawn by author, will be automatically deleted in 24 hours unless flagged)

Announcing a bonus round while you wait!

Almost 4 years ago

@alfarzan

Should we be able to pick our submission for the bonus profit round?

The submission picker says it's over.