Activity

Challenge Categories

Challenges Entered

Play in a realistic insurance market, compete for profit!

Latest submissions

See All| graded | 125543 | ||

| graded | 125479 | ||

| graded | 125125 |

| Participant | Rating |

|---|---|

lolatu2

lolatu2

|

0 |

michael_bordeleau

michael_bordeleau

|

0 |

s_charlesworth

s_charlesworth

|

0 |

| Participant | Rating |

|---|---|

nigel_carpenter

nigel_carpenter

|

0 |

michael_bordeleau

michael_bordeleau

|

0 |

Insurance pricing game

Ideas for an eventual next edition

Almost 4 years agoHey all,

I’m sure lots of people have ideas for a future edition. I thought now might be the last chance to discuss them.

Here are mine:

- Change the way we are given the training data set so that we are always tested “in the future”. This would involve gradually feeding us a larger training set . It would look like this. Let’s say the whole dataset is 5 years and is split in folds A B C D, E and F(a policy_id is always in the same fold).

Week 1 : we train on Year1 for folds A,B, C and D. We are tested on year2 for folds A,B and E.

Week 2: same training data set, but we are tested on year2 for folds, C, D and F.

Week 3: NEW TRAINING data: we now have access to year 1 and 2 for folds A,B,C,D and we are tested on year 3 for folds A,B and E

Week 4: same training data, tested on year 3 for folds C,D and F

Week5: New training data: we now have access to year 1-2-3 for folds A,B,C,D, tested on year 4 A,B and E

Week 6: same training data, tested on year 4 for folds C,D and F.

Week 7: new training data: we now have the full training data set (year 1-2-3-4) , tested on year 5 for folds A,B and E

Final WEEK : same training data, tested on year 5 of folds C, D and F.

a big con is that inactive people would need to at least refit their data on weeks 3 , 5 and 7. A solution would be to have Ali & crew refit inactive peoples on the new training set using the fit_model() functioin.

-

I wouldnt do a “cumulative” profit approach because a bad week would disqualify people and would make them create a new account to start from scratch, which wouldn’t be fun and also would be hell to monitor. However, a “championship” where you accumulate points like in Formula 1 could be interesting. A “crash” simply means you earn 0 point. I’d only start accumulating points about halfway through the championship so that early adopters don’t have too big of an advantage. I’d also give more points for the last week to keep the suspense.

-

Provide a bootstrapped estimate of the variance for the leaderboard by generating a bunch of “small test sets” sampled from the “big test set”.

-

Really need to find a way to give better feedback, but I can’t think of a non-hackable way.

-

We need to find a way that “selling 1 policy and hoping it doesnt make a claim” is no longer a strategy that can secure a spot in the top 12. A simple fix is disqualifying companies with less than 1/5 of a normal market share (in our case 10% / 5= 2%), but I’d rather find something less arbitraty.

2nd place solution

Almost 4 years ago(post withdrawn by author, will be automatically deleted in 24 hours unless flagged)

One more post about bugs

Almost 4 years agoI’m sorry to see this happened to you, this sucks. (and I’m guessing the folks at aicrowd feel terrible)

The heatmap is weird – shouldnt you be at “100% often” since you got a 100% market share?

A thought for people with frequency-severity models

Almost 4 years agomore reading on the pile - thanks for the link (and the summary) !

A thought for people with frequency-severity models

Almost 4 years agoI mean adding a features named “predicted_frequency” to the severity model and checking if that improves the severity model.

I thought about this because back in the days I was interested in a different type of discrete-continuous model: “which type of heating system do people have in their house” and “how much gas do they use if they picked gas?” and the predicted probability for all other systems would work it’s way into the gas consumption model to correct some bias. (Dubin and McFadden 1984, don’t read it) : https://econ.ucsb.edu/~tedb/Courses/GraduateTheoryUCSB/mcfaddendubin.pdf

An example explanation then would be "if you picked gas (higher cost up front than electricity, lower cost per energy unit, so only economical when you need a lot of energy) despite having a really small house (measured) then you probably have a really crappy insulation (not measured) and will probably consume more energy than would have been predicted only from your small square footage.

In that case, the estimate of the coefficient for the relation between “square footage” and “gas consumption” would be biaised downward since all big houses get gas, but only badly insulated small houses get gas.

It’s not the same purpose, but maybe there’s some signal left.

I didnt think about this for long - this might be part of my 111 out of 112 ideas that are useless

Legal / ethical aspects and other obligation

Almost 4 years ago(post withdrawn by author, will be automatically deleted in 24 hours unless flagged)

A thought for people with frequency-severity models

Almost 4 years agoDid you try adding the predicted frequency to the severity model?

Maybe “being unlikely to make a claim” means you only call your insurer after a disaster?

Legal / ethical aspects and other obligation

Almost 4 years agoI’ve also heard that European actuaries eat babies. I’m not asking for confirmation, that one has been confirmed.

Legal / ethical aspects and other obligation

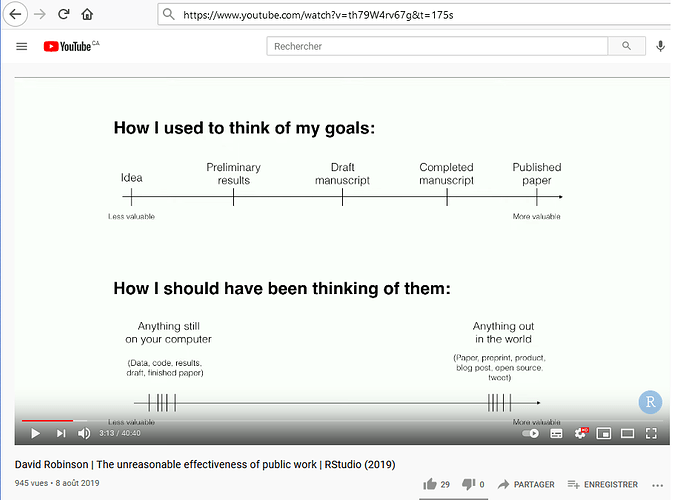

Almost 4 years agothanks mate! I’m a big believer in the value of “learning of public”. Someomes I look like a fool, but much more often I get some really cool insight from knowledgeable people I wouldnt have received otherwise. Overall, it’s totally worth it

edit: also, this:

Legal / ethical aspects and other obligation

Almost 4 years agoI’ve heard rumors of European insurers charging different price depending on the day you were born (Monday, Thursday…) to allow them to calculate price elasticities. Would love to have it confirmed or denied though.

Sharing of industrial practice

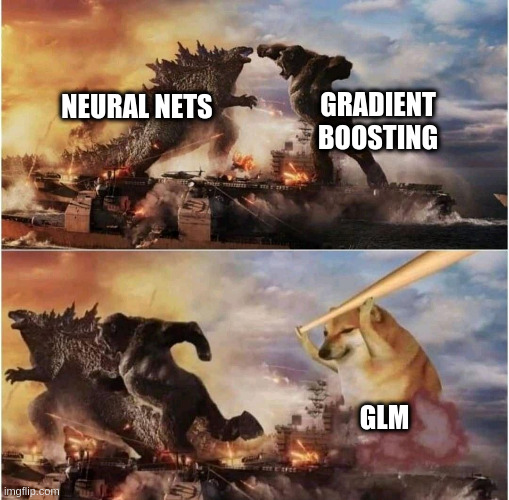

Almost 4 years agoNot an actuary, but from what I’ve seen in 3 insurance companies, the pricing is also mostly GLMs in Canada.

This is mostly due to the regulators, which vary by province. Non-pricing models such as fraud detection, churn probability or conversion rate can lean more towards machine learning and less towards interpretability.

Who else got a call from Ali?

Almost 4 years agoHaha!

I meant to joke that I had won but summoning you from a star pattern painted on the floor with burning candles at each points work too.

I had forgot about the debugging everyone part - sorry you’re having a terrible week.

"Asymmetric" loss function?

Almost 4 years agowow… wow!

Thanks for taking the time to dig into this and share your results. I’ve only been cheerleading so far, but all the work you and @Calico have shared is really interesting  Super cool to have Calico’s initial idea of linear increase show up in @guillaume_bs 's simulation.

Super cool to have Calico’s initial idea of linear increase show up in @guillaume_bs 's simulation.

I’m trying to come up with a simulation where there are 2 insurers and 2 groups of clients. Group A and B have the same average, but group B is much more variable. One insurer is aware of that, but the other is not. How bad is the unaware insurer going to get hurt? I’ll let this marinate for a bit

"Asymmetric" loss function?

Almost 4 years agoreally nice work!

12% seems pretty low compared to what most folks ended up charging. I’ll go back to the solution sharing thread to see if you posted your final %

"Asymmetric" loss function?

Almost 4 years agoThat’s super cool!

Doesnt really have to be an “asymmetric loss function”, just something that reflects the fact that I’m more careful when giving rebates than when I am charging more.

Your model ended up loading a higher percentage to policies with lower predicted claims and that works with the spirit of what I’m looking for

As a percentage of the premium, what did 2 times the standard deviation typically represent on an average premium of 100$ ?

CA

CA

Access to the test data?

Almost 4 years agoHey @alfarzan & friends

I was wondering if it would be possible to have access to the test data now that the competition is over.

I’d like to have the opportunity to score the shared solutions and simulate my own little market to get better feedback on what happened without asking you to do more work.

cheers