Location

RU


Flatland Challenge

Malfunction data generator does not work

About 5 years ago

Hi @mlerik!

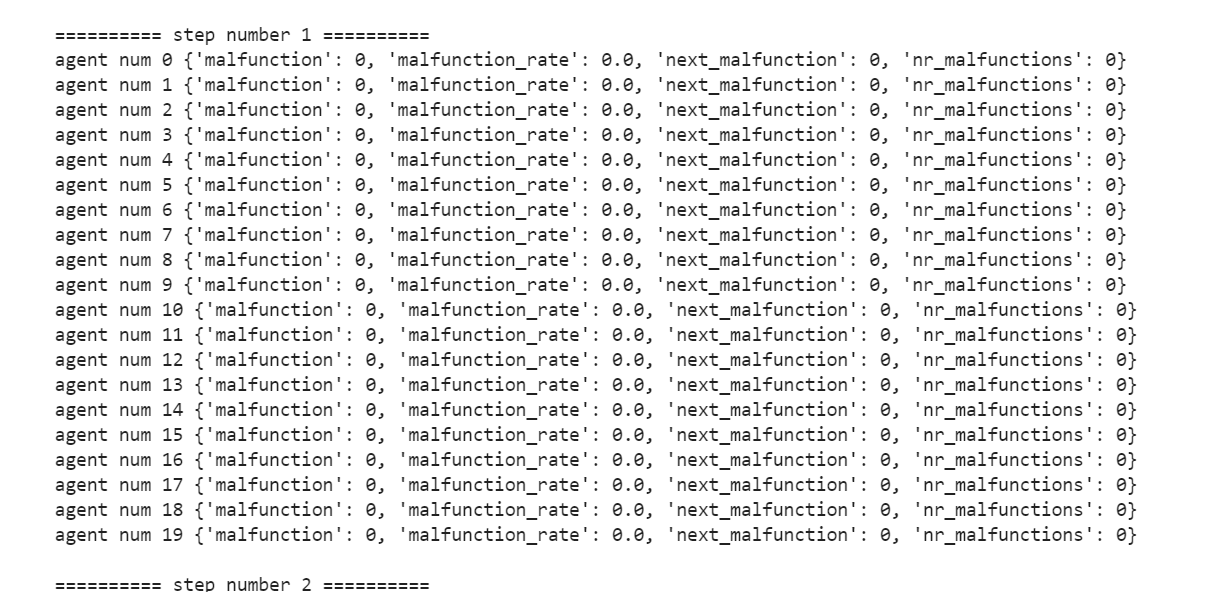

Please check the behavior of the last agent in my new simulation. It seems that new_malfunction is not shown correctly at step number 5. I see this problem only during the first steps (even though all agents have already entered the environment).

```python
import time

import numpy as np

from flatland.envs.observations import TreeObsForRailEnv, GlobalObsForRailEnv
from flatland.envs.predictions import ShortestPathPredictorForRailEnv
from flatland.envs.rail_env import RailEnv
from flatland.envs.rail_generators import sparse_rail_generator
from flatland.envs.schedule_generators import sparse_schedule_generator
from flatland.utils.rendertools import RenderTool, AgentRenderVariant

np.random.seed(1)

stochastic_data = {'prop_malfunction': 0.5,  # Percentage of defective agents
                   'malfunction_rate': 30,  # Rate of malfunction occurrence
                   'min_duration': 3,  # Minimal duration of malfunction
                   'max_duration': 10  # Max duration of malfunction
                   }

TreeObservation = TreeObsForRailEnv(max_depth=2, predictor=ShortestPathPredictorForRailEnv())

speed_ration_map = {1.: 0.25,  # Fast passenger train
                    1. / 2.: 0.25,  # Fast freight train
                    1. / 3.: 0.25,  # Slow commuter train
                    1. / 4.: 0.25}  # Slow freight train

env = RailEnv(width=60,
              height=60,
              rail_generator=sparse_rail_generator(max_num_cities=12,
                                                   # Number of cities in map (where train stations are)
                                                   seed=14,  # Random seed
                                                   grid_mode=False,
                                                   max_rails_between_cities=2,
                                                   max_rails_in_city=6,
                                                   ),
              schedule_generator=sparse_schedule_generator(speed_ration_map),
              number_of_agents=5,
              stochastic_data=stochastic_data,  # Malfunction data generator
              obs_builder_object=GlobalObsForRailEnv(),
              remove_agents_at_target=True
              )

obs = env.reset()

# One action dictionary per step: {agent index: action}
MY_ACTION = []
MY_ACTION.append({0: 4, 1: 2, 2: 4, 3: 4, 4: 2})
MY_ACTION.append({0: 2, 1: 2, 2: 2, 3: 2, 4: 2})
MY_ACTION.append({0: 2, 1: 2, 2: 2, 3: 2, 4: 2})
MY_ACTION.append({0: 2, 1: 2, 2: 2, 3: 2, 4: 2})
MY_ACTION.append({0: 2, 1: 2, 2: 3, 3: 2, 4: 4})
MY_ACTION.append({0: 2, 1: 2, 2: 1, 3: 2, 4: 2})
MY_ACTION.append({1: 4, 4: 4})

for step in range(7):
    print("========== step number ", step, " ==========", sep = "")
    action_dict = MY_ACTION[step]
    print("my action ", action_dict)
    # Print every agent's malfunction data before applying the step
    for ind in range(env.get_num_agents()):
        print(env.agents[ind].malfunction_data)
    next_obs, all_rewards, done, _ = env.step(action_dict)
```

Malfunction data generator does not work

About 5 years ago

Well, I have already downloaded this branch and tested my code with it.

Firstly, I want to say that this branch uses gym utils (so I had to download them to continue). I saw an issue about this problem.

Secondly, I am sending you a report with malfunction information. I did not explore it deeply, but I found some mistakes. As you can see, the malfunction length does not update during the first steps.

Start episode…

0.9744243621826172

5

========== step number 0 ==========

0 [True, True, True, True, True] [2, 4, 0, 1, 3]

My action: {0: 4, 1: 4, 2: 4, 3: 4, 4: 4}

agent num 0 {‘malfunction’: 4, ‘malfunction_rate’: 30, ‘next_malfunction’: 56, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 1 {‘malfunction’: 6, ‘malfunction_rate’: 30, ‘next_malfunction’: 19, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 2 {‘malfunction’: 11, ‘malfunction_rate’: 30, ‘next_malfunction’: 55, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 3 {‘malfunction’: 7, ‘malfunction_rate’: 30, ‘next_malfunction’: 1, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 4 {‘malfunction’: 7, ‘malfunction_rate’: 30, ‘next_malfunction’: 6, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

========== step number 1 ==========

My action: {0: 4, 1: 4, 2: 4, 3: 4, 4: 4}

agent num 0 {‘malfunction’: 4, ‘malfunction_rate’: 30, ‘next_malfunction’: 56, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 1 {‘malfunction’: 6, ‘malfunction_rate’: 30, ‘next_malfunction’: 19, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 2 {‘malfunction’: 11, ‘malfunction_rate’: 30, ‘next_malfunction’: 55, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 3 {‘malfunction’: 7, ‘malfunction_rate’: 30, ‘next_malfunction’: 1, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 4 {‘malfunction’: 7, ‘malfunction_rate’: 30, ‘next_malfunction’: 6, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

========== step number 2 ==========

My action: {0: 4, 1: 4, 2: 4, 3: 4, 4: 4}

agent num 0 {‘malfunction’: 4, ‘malfunction_rate’: 30, ‘next_malfunction’: 56, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 1 {‘malfunction’: 6, ‘malfunction_rate’: 30, ‘next_malfunction’: 19, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 2 {‘malfunction’: 11, ‘malfunction_rate’: 30, ‘next_malfunction’: 55, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 3 {‘malfunction’: 7, ‘malfunction_rate’: 30, ‘next_malfunction’: 1, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 4 {‘malfunction’: 7, ‘malfunction_rate’: 30, ‘next_malfunction’: 6, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

========== step number 3 ==========

My action: {0: 4, 1: 4, 2: 4, 3: 4, 4: 4}

agent num 0 {‘malfunction’: 4, ‘malfunction_rate’: 30, ‘next_malfunction’: 56, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 1 {‘malfunction’: 6, ‘malfunction_rate’: 30, ‘next_malfunction’: 19, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 2 {‘malfunction’: 11, ‘malfunction_rate’: 30, ‘next_malfunction’: 55, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 3 {‘malfunction’: 7, ‘malfunction_rate’: 30, ‘next_malfunction’: 1, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 4 {‘malfunction’: 7, ‘malfunction_rate’: 30, ‘next_malfunction’: 6, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

========== step number 4 ==========

My action: {0: 2, 1: 4, 2: 4, 3: 4, 4: 4}

agent num 0 {‘malfunction’: 3, ‘malfunction_rate’: 30, ‘next_malfunction’: 55, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 1 {‘malfunction’: 6, ‘malfunction_rate’: 30, ‘next_malfunction’: 19, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 2 {‘malfunction’: 11, ‘malfunction_rate’: 30, ‘next_malfunction’: 55, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 3 {‘malfunction’: 7, ‘malfunction_rate’: 30, ‘next_malfunction’: 1, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 4 {‘malfunction’: 7, ‘malfunction_rate’: 30, ‘next_malfunction’: 6, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

========== step number 5 ==========

My action: {0: 2, 1: 4, 2: 4, 3: 4, 4: 4}

agent num 0 {‘malfunction’: 2, ‘malfunction_rate’: 30, ‘next_malfunction’: 54, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 1 {‘malfunction’: 6, ‘malfunction_rate’: 30, ‘next_malfunction’: 19, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 2 {‘malfunction’: 11, ‘malfunction_rate’: 30, ‘next_malfunction’: 55, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 3 {‘malfunction’: 7, ‘malfunction_rate’: 30, ‘next_malfunction’: 1, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 4 {‘malfunction’: 7, ‘malfunction_rate’: 30, ‘next_malfunction’: 6, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

========== step number 6 ==========

My action: {0: 2, 1: 2, 2: 4, 3: 4, 4: 4}

agent num 0 {‘malfunction’: 1, ‘malfunction_rate’: 30, ‘next_malfunction’: 53, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 1 {‘malfunction’: 5, ‘malfunction_rate’: 30, ‘next_malfunction’: 18, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 2 {‘malfunction’: 11, ‘malfunction_rate’: 30, ‘next_malfunction’: 55, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 3 {‘malfunction’: 7, ‘malfunction_rate’: 30, ‘next_malfunction’: 1, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 4 {‘malfunction’: 7, ‘malfunction_rate’: 30, ‘next_malfunction’: 6, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

========== step number 7 ==========

My action: {0: 2, 1: 2, 2: 4, 3: 2, 4: 2}

agent num 0 {‘malfunction’: 0, ‘malfunction_rate’: 30, ‘next_malfunction’: 52, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 1 {‘malfunction’: 4, ‘malfunction_rate’: 30, ‘next_malfunction’: 17, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 2 {‘malfunction’: 11, ‘malfunction_rate’: 30, ‘next_malfunction’: 55, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 3 {‘malfunction’: 6, ‘malfunction_rate’: 30, ‘next_malfunction’: 0, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 4 {‘malfunction’: 6, ‘malfunction_rate’: 30, ‘next_malfunction’: 5, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

========== step number 8 ==========

My action: {0: 2, 1: 2, 2: 4, 3: 2, 4: 2}

agent num 0 {‘malfunction’: 0, ‘malfunction_rate’: 30, ‘next_malfunction’: 51, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 1 {‘malfunction’: 3, ‘malfunction_rate’: 30, ‘next_malfunction’: 16, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 2 {‘malfunction’: 11, ‘malfunction_rate’: 30, ‘next_malfunction’: 55, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 3 {‘malfunction’: 5, ‘malfunction_rate’: 30, ‘next_malfunction’: 0, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 4 {‘malfunction’: 5, ‘malfunction_rate’: 30, ‘next_malfunction’: 4, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

========== step number 9 ==========

My action: {0: 2, 1: 2, 2: 4, 3: 2, 4: 1}

agent num 0 {‘malfunction’: 0, ‘malfunction_rate’: 30, ‘next_malfunction’: 50, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 1 {‘malfunction’: 2, ‘malfunction_rate’: 30, ‘next_malfunction’: 15, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 2 {‘malfunction’: 11, ‘malfunction_rate’: 30, ‘next_malfunction’: 55, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 3 {‘malfunction’: 4, ‘malfunction_rate’: 30, ‘next_malfunction’: 0, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 4 {‘malfunction’: 4, ‘malfunction_rate’: 30, ‘next_malfunction’: 3, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

========== step number 10 ==========

My action: {0: 3, 1: 2, 2: 4, 3: 2, 4: 3}

agent num 0 {‘malfunction’: 0, ‘malfunction_rate’: 30, ‘next_malfunction’: 49, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 1 {‘malfunction’: 1, ‘malfunction_rate’: 30, ‘next_malfunction’: 14, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 2 {‘malfunction’: 11, ‘malfunction_rate’: 30, ‘next_malfunction’: 55, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 3 {‘malfunction’: 3, ‘malfunction_rate’: 30, ‘next_malfunction’: 0, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 4 {‘malfunction’: 3, ‘malfunction_rate’: 30, ‘next_malfunction’: 2, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

========== step number 11 ==========

My action: {0: 3, 1: 2, 2: 2, 3: 2, 4: 1}

agent num 0 {‘malfunction’: 0, ‘malfunction_rate’: 30, ‘next_malfunction’: 48, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 1 {‘malfunction’: 0, ‘malfunction_rate’: 30, ‘next_malfunction’: 13, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 2 {‘malfunction’: 10, ‘malfunction_rate’: 30, ‘next_malfunction’: 54, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 3 {‘malfunction’: 2, ‘malfunction_rate’: 30, ‘next_malfunction’: 0, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 4 {‘malfunction’: 2, ‘malfunction_rate’: 30, ‘next_malfunction’: 1, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

========== step number 12 ==========

My action: {0: 1, 1: 2, 2: 2, 3: 2, 4: 3}

agent num 0 {‘malfunction’: 0, ‘malfunction_rate’: 30, ‘next_malfunction’: 47, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 1 {‘malfunction’: 0, ‘malfunction_rate’: 30, ‘next_malfunction’: 12, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 2 {‘malfunction’: 9, ‘malfunction_rate’: 30, ‘next_malfunction’: 53, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 3 {‘malfunction’: 1, ‘malfunction_rate’: 30, ‘next_malfunction’: 0, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 4 {‘malfunction’: 1, ‘malfunction_rate’: 30, ‘next_malfunction’: 0, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

========== step number 13 ==========

My action: {0: 4, 1: 2, 2: 2, 3: 4, 4: 2}

agent num 0 {‘malfunction’: 0, ‘malfunction_rate’: 30, ‘next_malfunction’: 46, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 1 {‘malfunction’: 0, ‘malfunction_rate’: 30, ‘next_malfunction’: 11, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 2 {‘malfunction’: 8, ‘malfunction_rate’: 30, ‘next_malfunction’: 52, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 3 {‘malfunction’: 0, ‘malfunction_rate’: 30, ‘next_malfunction’: 0, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 4 {‘malfunction’: 0, ‘malfunction_rate’: 30, ‘next_malfunction’: 0, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

========== step number 14 ==========

My action: {0: 4, 1: 4, 2: 4, 3: 4, 4: 4}

agent num 0 {‘malfunction’: 0, ‘malfunction_rate’: 30, ‘next_malfunction’: 45, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 1 {‘malfunction’: 0, ‘malfunction_rate’: 30, ‘next_malfunction’: 10, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 2 {‘malfunction’: 7, ‘malfunction_rate’: 30, ‘next_malfunction’: 51, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 3 {‘malfunction’: 5, ‘malfunction_rate’: 30, ‘next_malfunction’: 29, ‘nr_malfunctions’: 2, ‘moving_before_malfunction’: False}

agent num 4 {‘malfunction’: 9, ‘malfunction_rate’: 30, ‘next_malfunction’: 100, ‘nr_malfunctions’: 2, ‘moving_before_malfunction’: True}

========== step number 15 ==========

0 [False, False, False, False, False] [2, 4, 0, 1, 3]

1 [False, False, False, False, False] [3, 4, 1, 0, 2]

2 [False, False, False, False, False] [0, 3, 1, 2, 4]

3 [False, False, False, False, False] [2, 4, 1, 0, 3]

4 [False, False, False, False, False] [3, 2, 1, 4, 0]

5 [False, False, False, False, False] [3, 2, 1, 0, 4]

6 [False, False, False, False, False] [0, 3, 2, 4, 1]

7 [False, False, False, False, False] [2, 4, 0, 1, 3]

8 [False, False, False, False, False] [1, 0, 3, 4, 2]

9 [False, False, False, False, False] [2, 4, 3, 0, 1]

My action: {2: 4, 3: 4, 4: 4}

agent num 0 {‘malfunction’: 0, ‘malfunction_rate’: 30, ‘next_malfunction’: 44, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 1 {‘malfunction’: 0, ‘malfunction_rate’: 30, ‘next_malfunction’: 9, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 2 {‘malfunction’: 6, ‘malfunction_rate’: 30, ‘next_malfunction’: 50, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 3 {‘malfunction’: 4, ‘malfunction_rate’: 30, ‘next_malfunction’: 28, ‘nr_malfunctions’: 2, ‘moving_before_malfunction’: False}

agent num 4 {‘malfunction’: 8, ‘malfunction_rate’: 30, ‘next_malfunction’: 99, ‘nr_malfunctions’: 2, ‘moving_before_malfunction’: True}

========== step number 16 ==========

My action: {2: 4, 3: 4, 4: 4}

agent num 0 {‘malfunction’: 0, ‘malfunction_rate’: 30, ‘next_malfunction’: 43, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 1 {‘malfunction’: 0, ‘malfunction_rate’: 30, ‘next_malfunction’: 8, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 2 {‘malfunction’: 5, ‘malfunction_rate’: 30, ‘next_malfunction’: 49, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 3 {‘malfunction’: 3, ‘malfunction_rate’: 30, ‘next_malfunction’: 27, ‘nr_malfunctions’: 2, ‘moving_before_malfunction’: False}

agent num 4 {‘malfunction’: 7, ‘malfunction_rate’: 30, ‘next_malfunction’: 98, ‘nr_malfunctions’: 2, ‘moving_before_malfunction’: True}

========== step number 17 ==========

My action: {2: 4, 3: 4, 4: 4}

agent num 0 {‘malfunction’: 0, ‘malfunction_rate’: 30, ‘next_malfunction’: 42, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 1 {‘malfunction’: 0, ‘malfunction_rate’: 30, ‘next_malfunction’: 7, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 2 {‘malfunction’: 4, ‘malfunction_rate’: 30, ‘next_malfunction’: 48, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 3 {‘malfunction’: 2, ‘malfunction_rate’: 30, ‘next_malfunction’: 26, ‘nr_malfunctions’: 2, ‘moving_before_malfunction’: False}

agent num 4 {‘malfunction’: 6, ‘malfunction_rate’: 30, ‘next_malfunction’: 97, ‘nr_malfunctions’: 2, ‘moving_before_malfunction’: True}

========== step number 18 ==========

My action: {2: 4, 3: 4, 4: 4}

agent num 0 {‘malfunction’: 0, ‘malfunction_rate’: 30, ‘next_malfunction’: 41, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 1 {‘malfunction’: 0, ‘malfunction_rate’: 30, ‘next_malfunction’: 6, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 2 {‘malfunction’: 3, ‘malfunction_rate’: 30, ‘next_malfunction’: 47, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 3 {‘malfunction’: 1, ‘malfunction_rate’: 30, ‘next_malfunction’: 25, ‘nr_malfunctions’: 2, ‘moving_before_malfunction’: False}

agent num 4 {‘malfunction’: 5, ‘malfunction_rate’: 30, ‘next_malfunction’: 96, ‘nr_malfunctions’: 2, ‘moving_before_malfunction’: True}

========== step number 19 ==========

My action: {2: 4, 4: 4}

agent num 0 {‘malfunction’: 0, ‘malfunction_rate’: 30, ‘next_malfunction’: 40, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 1 {‘malfunction’: 0, ‘malfunction_rate’: 30, ‘next_malfunction’: 5, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 2 {‘malfunction’: 2, ‘malfunction_rate’: 30, ‘next_malfunction’: 46, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 3 {‘malfunction’: 0, ‘malfunction_rate’: 30, ‘next_malfunction’: 24, ‘nr_malfunctions’: 2, ‘moving_before_malfunction’: False}

agent num 4 {‘malfunction’: 4, ‘malfunction_rate’: 30, ‘next_malfunction’: 95, ‘nr_malfunctions’: 2, ‘moving_before_malfunction’: True}

========== step number 20 ==========

My action: {2: 4, 4: 4}

agent num 0 {‘malfunction’: 0, ‘malfunction_rate’: 30, ‘next_malfunction’: 39, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 1 {‘malfunction’: 0, ‘malfunction_rate’: 30, ‘next_malfunction’: 4, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 2 {‘malfunction’: 1, ‘malfunction_rate’: 30, ‘next_malfunction’: 45, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 3 {‘malfunction’: 0, ‘malfunction_rate’: 30, ‘next_malfunction’: 23, ‘nr_malfunctions’: 2, ‘moving_before_malfunction’: False}

agent num 4 {‘malfunction’: 3, ‘malfunction_rate’: 30, ‘next_malfunction’: 94, ‘nr_malfunctions’: 2, ‘moving_before_malfunction’: True}

========== step number 21 ==========

My action: {4: 4}

agent num 0 {‘malfunction’: 0, ‘malfunction_rate’: 30, ‘next_malfunction’: 38, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 1 {‘malfunction’: 0, ‘malfunction_rate’: 30, ‘next_malfunction’: 3, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 2 {‘malfunction’: 0, ‘malfunction_rate’: 30, ‘next_malfunction’: 44, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 3 {‘malfunction’: 0, ‘malfunction_rate’: 30, ‘next_malfunction’: 22, ‘nr_malfunctions’: 2, ‘moving_before_malfunction’: False}

agent num 4 {‘malfunction’: 2, ‘malfunction_rate’: 30, ‘next_malfunction’: 93, ‘nr_malfunctions’: 2, ‘moving_before_malfunction’: True}

========== step number 22 ==========

My action: {4: 4}

agent num 0 {‘malfunction’: 0, ‘malfunction_rate’: 30, ‘next_malfunction’: 37, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 1 {‘malfunction’: 0, ‘malfunction_rate’: 30, ‘next_malfunction’: 2, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 2 {‘malfunction’: 0, ‘malfunction_rate’: 30, ‘next_malfunction’: 43, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 3 {‘malfunction’: 0, ‘malfunction_rate’: 30, ‘next_malfunction’: 21, ‘nr_malfunctions’: 2, ‘moving_before_malfunction’: False}

agent num 4 {‘malfunction’: 1, ‘malfunction_rate’: 30, ‘next_malfunction’: 92, ‘nr_malfunctions’: 2, ‘moving_before_malfunction’: True}

========== step number 23 ==========

My action: {}

agent num 0 {‘malfunction’: 0, ‘malfunction_rate’: 30, ‘next_malfunction’: 36, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 1 {‘malfunction’: 0, ‘malfunction_rate’: 30, ‘next_malfunction’: 1, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 2 {‘malfunction’: 0, ‘malfunction_rate’: 30, ‘next_malfunction’: 42, ‘nr_malfunctions’: 1, ‘moving_before_malfunction’: False}

agent num 3 {‘malfunction’: 0, ‘malfunction_rate’: 30, ‘next_malfunction’: 20, ‘nr_malfunctions’: 2, ‘moving_before_malfunction’: False}

agent num 4 {‘malfunction’: 0, ‘malfunction_rate’: 30, ‘next_malfunction’: 91, ‘nr_malfunctions’: 2, ‘moving_before_malfunction’: True}

Episode: Steps 23 Score = -80.0
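A report like the one above can be checked mechanically rather than by eye. The following is only a minimal sketch: `stalled_agents` is a hypothetical helper, not part of Flatland, and it assumes each agent's malfunction data is a plain dictionary with a `'malfunction'` key, as in the printed output. It flags agents whose positive `'malfunction'` counter did not change between two consecutive snapshots.

```python
from typing import Dict, List


def stalled_agents(prev: List[Dict], curr: List[Dict]) -> List[int]:
    """Return indices of agents whose positive 'malfunction' counter did not change.

    Hypothetical helper for inspecting logs; it is not a Flatland API.
    """
    return [
        idx
        for idx, (before, after) in enumerate(zip(prev, curr))
        if before['malfunction'] > 0 and after['malfunction'] == before['malfunction']
    ]


# 'malfunction' values copied from steps 3 and 4 of the report.
step_3 = [{'malfunction': m} for m in (4, 6, 11, 7, 7)]
step_4 = [{'malfunction': m} for m in (3, 6, 11, 7, 7)]

# Agent 0 counts down between the two steps; agents 1 to 4 do not.
print(stalled_agents(step_3, step_4))
```

Whether a frozen counter is really a bug depends on how the environment treats agents in that state, so the output is a hint for where to look, not a verdict.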

Malfunction data generator does not work

About 5 years ago

Hi mlerik

Just on every step…

Note that it is the latest master branch. If you want to test something else, I have some time right now.

Malfunction data generator does not work

About 5 years ago

Hi everyone!

I would like to point out that the malfunction data generator in the latest FLATland version (for example in flatland_2_0_example.py) does not work at all. I tried setting the percentage of defective agents to its maximum but didn't get a single occurrence.

Please add this feature again. Thank you!

Rewards function calculation bug

About 5 years ago

Well, actually I wanted to clarify how the final score of our submissions is calculated.

I guess that you use this reward function to calculate the optimality of a solution in Round 1, since I found this line in the run.py script:

`if done['__all__']: print("Reward : ", sum(list(all_rewards.values())))`

So, if you still use this approach (and you definitely use it in flatland_2_0_example.py), the score is calculated incorrectly.

For example, I can order some agents not to move and not to enter the environment. In this case they contribute nothing to the total penalty, so the final score comes out better than it should (which is of course incorrect). I can describe this in more detail if you want.

Thus, there is a bug in the score calculation, which can be fixed by changing the default rewards function or in some other way.

Sorry for any misunderstanding.

Rewards function calculation bug

About 5 years ago

Hi FLATland team!

I want to report a bug in the rewards function (latest commit - 05.10).

I found that the system does not count any score penalty (reward) for an agent that has not started moving and formally does not exist on the map. This means that an agent can wait at its start point for some steps for free.

Please add rewards calculation for non-spawned agents as if they were waiting.
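The effect of the reported behavior on the total score can be illustrated with a small, self-contained sketch. Everything here is an assumption made for illustration: `episode_score`, the `(steps_on_map, steps_waiting_before_spawn)` pairs, and the flat per-step penalty of -1 are hypothetical and are not taken from the Flatland source.

```python
from typing import Iterable, Tuple

STEP_PENALTY = -1.0  # assumed per-step penalty, for illustration only


def episode_score(agents: Iterable[Tuple[int, int]], penalize_waiting: bool) -> float:
    """Sum per-step penalties over all agents.

    Each agent is a (steps_on_map, steps_waiting_before_spawn) pair.
    With penalize_waiting=False, steps before spawning cost nothing,
    which mirrors the behavior described in the report.
    """
    total = 0.0
    for steps_on_map, steps_waiting in agents:
        total += STEP_PENALTY * steps_on_map
        if penalize_waiting:
            total += STEP_PENALTY * steps_waiting
    return total


# Two agents spend 10 steps on the map; the second one waits 5 steps before entering.
agents = [(10, 0), (10, 5)]
score_reported = episode_score(agents, penalize_waiting=False)   # waiting is free
score_suggested = episode_score(agents, penalize_waiting=True)   # waiting is penalized
print(score_reported, score_suggested)
```

Under these assumptions the delayed agent costs nothing extra in the first case, so holding agents back at their start points cannot make the score worse.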

Suggestion/bug fix of Flatland 2.0

About 5 years ago

Hi flatland team!

Just a few questions about the current version of Flatland.

- I found that the `agent.malfunction_data['next_malfunction']` parameter can sometimes be below zero. It happens when the next malfunction for a single agent occurs before the current one has ended (I can send simulation code if you want). So a Flatland user has to write one extra line to find out the `next_malfunction` value. Personally, I use this code:

  `next_malfunction = max(self.env.agents[ind].malfunction_data['next_malfunction'], self.env.agents[ind].malfunction_data['malfunction'] + 1)`

  Please fix your `next_malfunction` output with something like that to avoid any misunderstanding.

- And one more question. Do you want to allow the agent to wait (with STOP_MOVING status) when its `position_fraction` is greater than zero, that is, when the agent has started to move between two cells? I ask because right now agents are unable to do that, which does not look logical.

- What values of `malfunction_rate` and `max_duration` will you set in the simulations? (Sorry if I am asking this too early.)

P. S. Thank you for the past bug fixes. Now it is possible to make full solutions for Round 2. You've done a great job!
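The `next_malfunction` workaround from the first question can be wrapped in a small function. This is a sketch under stated assumptions: `effective_next_malfunction` is a hypothetical name, and the input is assumed to be a plain dictionary with the `'malfunction'` and `'next_malfunction'` keys used in the post. The `max(...)` expression itself is the one quoted there.

```python
def effective_next_malfunction(malfunction_data: dict) -> int:
    """Clamp 'next_malfunction' so it never precedes the end of the current malfunction.

    Hypothetical helper; the max(...) expression comes from the post.
    """
    return max(malfunction_data['next_malfunction'],
               malfunction_data['malfunction'] + 1)


# A negative raw value is replaced by the first step after the current malfunction ends.
print(effective_next_malfunction({'malfunction': 3, 'next_malfunction': -2}))

# A value already beyond the current malfunction is returned unchanged.
print(effective_next_malfunction({'malfunction': 0, 'next_malfunction': 13}))
```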

Reporting Bugs in Flatland

About 5 years ago

Hi everyone!

I would like to report a bug in Flatland 2.0 connected with stochastic events.

As you can see, a stochastic event occurs with my agent on the first step. Then my agent waits for several steps, sending “4” (or nothing) to the system.

However, when this stochastic event ends, something throws the agent out of the railway system (or off the map, in my example). You can see that the position of the agent is (-1, 2).

Note that this problem occurs in many different cases where an agent is waiting while a stochastic event is ending.

Simulate the bug with the following code (just copy it into Jupyter):

```python
import time

from flatland.envs.observations import TreeObsForRailEnv
from flatland.envs.predictions import ShortestPathPredictorForRailEnv
from flatland.envs.rail_env import RailEnv
from flatland.envs.rail_generators import sparse_rail_generator
from flatland.envs.schedule_generators import sparse_schedule_generator
from flatland.utils.rendertools import RenderTool

stochastic_data = {'prop_malfunction': 1.,  # Percentage of defective agents
                   'malfunction_rate': 70,  # Rate of malfunction occurrence
                   'min_duration': 2,  # Minimal duration of malfunction
                   'max_duration': 5  # Max duration of malfunction
                   }

speed_ration_map = {1.: 1.,  # Fast passenger train
                    1. / 2.: 0.,  # Fast freight train
                    1. / 3.: 0.,  # Slow commuter train
                    1. / 4.: 0.}  # Slow freight train

env = RailEnv(width=25,
              height=30,
              rail_generator=sparse_rail_generator(num_cities=5,  # Number of cities in map (where train stations are)
                                                   num_intersections=4,  # Number of intersections (no start / target)
                                                   num_trainstations=25,  # Number of possible start/targets on map
                                                   min_node_dist=6,  # Minimal distance of nodes
                                                   node_radius=3,  # Proximity of stations to city center
                                                   num_neighb=3,  # Number of connections to other cities/intersections
                                                   seed=215545,  # Random seed
                                                   grid_mode=True,
                                                   enhance_intersection=False
                                                   ),
              schedule_generator=sparse_schedule_generator(speed_ration_map),
              number_of_agents=1,
              stochastic_data=stochastic_data,  # Malfunction data generator
              )

env_renderer = RenderTool(env)
env_renderer.render_env(show=True, frames=False, show_observations=False)

# Empty action dictionary: the agent sends nothing to the system
_action = dict()

for step in range(4):
    # _action[0] = 4
    obs, all_rewards, done, info = env.step(_action)
    print(info["malfunction"][0])
    print(env.agents[0].position)
    env_renderer.render_env(show=True, frames=False, show_observations=False)
    time.sleep(0.1)

print("Here position of the agent is invalid")
```
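An invalid position such as (-1, 2) can be caught automatically instead of being read off the printed output. The following is a minimal sketch, assuming positions are `(row, column)` tuples and that an agent without a position is represented by `None`; `is_on_map` is a hypothetical helper and not part of Flatland.

```python
from typing import Optional, Tuple


def is_on_map(position: Optional[Tuple[int, int]], height: int, width: int) -> bool:
    """Return True if position is a (row, column) pair inside a height x width grid.

    Hypothetical helper; None is treated as "not on the map".
    """
    if position is None:
        return False
    row, col = position
    return 0 <= row < height and 0 <= col < width


# Grid size taken from the example above (width=25, height=30).
print(is_on_map((-1, 2), height=30, width=25))  # the position reported in the post
print(is_on_map((0, 2), height=30, width=25))
```

In the reproduction script, such a check could be applied to `env.agents[0].position` after every call to `env.step` to find the exact step at which the agent leaves the grid.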

Thank you for fixing this bug. I hope it won't be difficult.

[ANNOUNCEMENT] Start Round 2

About 5 years ago

Hi!

It seems that the Example link is unavailable. Could you please fix that?