Location

CA

CA

Badges

Activity

Challenge Categories

Challenges Entered

Measure sample efficiency and generalization in reinforcement learning using procedurally generated environments


A dataset and open-ended challenge for music recommendation research


Sample-efficient reinforcement learning in Minecraft


NeurIPS 2020: MineRL Competition

Preprocessing rule

Over 5 years ago

Hi all,

We are discussing with our advisors and team! As you can tell this is a difficult line to draw.

Will

[Announcement] MineRL 0.3.1 Released!

Over 5 years ago

@everyone MineRL 0.3.1 Released!

After several weeks of late nights and bug squashing, @imushroom1 and I are excited to announce the release of MineRL 0.3.1 and the MineRL 2020 competition package & dataset!

This update is a largely technical one in a series of many updates we’ll be pushing out which refactor and drastically improve the API underlying MineRL. In the coming months, we will slowly move away from Malmo to our own Minecraft simulator, in addition to releasing a large amount of our closed-source data recording and pipelining infrastructure.

To get started:

pip install --upgrade minerl

python -m minerl.data.download

See new envs & data (https://minerl.io/docs/environments/index.html#competition-environments)!

Major changes:

* Released MineRLObtainDiamondVectorObf-v0 (and other obfuscated environments for the competition! See http://minerl.io)

* Released new V3 dataset containing both obfuscated and standard env data.

* Internally refactored the MineRL API to use env handlers.

* Unified the data publishing and environment specification API

* Forced all spaces to return Numpy arrays and scalars.

* Open sourced the data publishing pipeline

* Added forbidden streams to the data pipeline

* Rewrote the MineRL viewer to be extensible and support rendering for competition streams

* Rehosted the data on S3, drastically increasing

* Made Asia and Europe mirrors for the dataset.

* Made new MineRL download method (supports sharding and mirrors)

NeurIPS 2019: MineRL Competition

[IMPORTANT] Announcement - MineRL 0.2.5 Released!

About 6 years ago

@everyone Hello! Happy to announce that minerl==0.2.5 is now out! If you are a competitor, it is crucial that you upgrade to this version and retrain your models, as it fixes a reward loop which led to arbitrarily high rewards on the leaderboard.

Make sure to

pip3 install --upgrade minerl

Changes in minerl==0.2.5

- Issue #230. Now user submissions can equip wooden pickaxes!

- Issue #246. There is no more reward loop in obtain diamond! Scores on the leaderboard will be updated soon.

- Issue #259. Rewards are now given for obtaining cobblestone!

- Issue #249. We have now fixed a major performance drop in Treechop. You can now get > 500 FPS with head on an Nvidia GPU.

That’s all, but expect new updates soon! Thank you for your patience!

[Announcement] Competition timeline moved back!

Over 6 years ago

Hey all,

Hope all is going well! Due to the number of times we had previously moved the competition launch date back, we have decided to push the competition timeline back to allow you more time to compete in Round 1. Importantly,

- We’ve pushed the Round 1 end date to October 25th.

- The Round 2 end date is now November 25th.

- In addition, we will award a travel grant to representatives of all finalist teams in Round 2, to allow for travel to be updated for NeurIPS!

Really excited to see your submissions!

P.S. Expect some new updates to MineRL coming soon!

The MineRL Team

Official Updated Timeline

May 10, 2019: Applications for Grants Open. Participants can apply to receive travel grants and/or compute grants.

Jun 8, 2019: First Round Begins. Participants invited to download starting materials and to begin developing their submission.

Jun 26, 2019: Application for Compute Grants Closes. Participants can no longer apply for compute grants.

Jul 8, 2019: Notification of Compute Grant Winners. Participants notified about whether they have received a compute grant.

Oct 1, 2019 (UTC 23:00): Inclusion@NeurIPS Travel Grant Application Closes. Participants can no longer apply for travel grants.

Oct 9, 2019: Travel Grant Winners Notified. Winners of Inclusion@NeurIPS travel grants are notified.

Oct 25, 2019 (UTC 12:00; previously Sep 22, 2019): First Round Ends. Submissions for consideration for entry into the final round are closed. Models will be evaluated by organizers and partners.

Oct 30, 2019 (previously Sep 27, 2019): First Round Results Posted. Official results will be posted notifying finalists.

Nov 1, 2019: Final Round Begins. Finalists are invited to submit their models against the held-out validation texture pack to ensure their models generalize well.

Nov 25, 2019: Final Round Closed. Submissions for finalists are closed, evaluations are completed, and organizers begin reviewing submissions.

Dec 6, 2019: Special Awards Posted. Additional awards granted by the advisory committee are posted.

Dec 6, 2019: Final Round Results Posted. Official results of model training and evaluation are posted.

Dec 8, 2019: NeurIPS 2019! All Round 2 teams invited to the conference to present their results.

[Announcement] Submissions for Round 1 now open!

Over 6 years ago

NeurIPS 2019 Competition Submissions Open


We are so excited to announce that Round 1 of the MineRL NeurIPS 2019 Competition is now open for submissions! Our partners at AIcrowd just released their competition submission starter kit that you can find here.

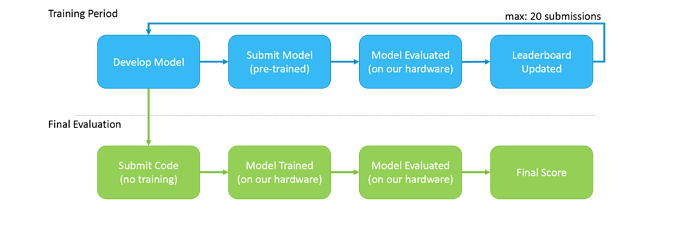

Here’s how you submit in Round 1:

- Sign up to join the competition on the AIcrowd website.

- Clone the AIcrowd starter template and start developing your submissions.

- Submit an agent to the leaderboard:

  - Train your agents locally (or on Azure) in under 8,000,000 samples over 4 days. Participants should use hardware no more powerful than NG6v2 instances on Azure (6 CPU cores, 112 GiB RAM, 736 GiB SSD, and an NVIDIA P100 GPU).

  - Push your repository to AIcrowd GitLab, which verifies that it can successfully be re-trained by the organizers at the end of Round 1 and then runs the test entrypoint to evaluate the trained agent’s performance!

Once the full evaluation of the uploaded model/code is done, the participant’s submission will appear on the leaderboard!

» Get started now! «

- MineRL Competition Page - Main registration page & leaderboard.

- Submission Starter Template - Starting code for submissions and guide to submit!

- Example Baselines - A set of competition and non-competition baselines for minerl.

Creating and using my own dataset

Over 6 years ago

Well, additionally, we use a different hold-out hidden texture during Round 2, so even if you trained on your own dataset, it would damage your performance in Round 2. That’s the main reason behind this rule!

Env.step() slowly with xvfb-run

Over 6 years ago

@haozheng_li I wonder if ChainerRL doesn’t recognize your GPU on your Linux box?

Env.step() slowly with xvfb-run

Over 6 years ago

@haozheng_li Are you calling env.render()? Can you see how fast this runs:

    import minerl
    import gym

    env = gym.make('MineRLNavigateDense-v0')

    obs = env.reset()
    done = False
    net_reward = 0

    while not done:
        action = env.action_space.noop()

        action['camera'] = [0, 0.03*obs["compassAngle"]]
        action['back'] = 0
        action['forward'] = 1
        action['jump'] = 1
        action['attack'] = 1

        obs, reward, done, info = env.step(action)

        net_reward += reward
        print("Total reward: ", net_reward)
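To put a number on "how fast this runs", one option is to time a fixed number of steps and report steps per second. The sketch below is only an illustration: `measure_fps` is a hypothetical helper, not part of MineRL, and the `time.sleep` call stands in for a real environment step so the example runs without MineRL installed.

```python
import time


def measure_fps(step_fn, n_steps=100):
    """Call step_fn n_steps times and return the average calls per second.

    step_fn is any zero-argument callable; with a real environment it would
    wrap a single env.step(...) call (hypothetical helper, not a MineRL API).
    """
    if n_steps <= 0:
        raise ValueError("n_steps must be positive")
    start = time.perf_counter()
    for _ in range(n_steps):
        step_fn()
    elapsed = time.perf_counter() - start
    # Guard against a zero reading from a very coarse clock.
    return n_steps / max(elapsed, 1e-9)


if __name__ == "__main__":
    # Stand-in for an environment step that takes roughly 1 ms.
    fps = measure_fps(lambda: time.sleep(0.001), n_steps=50)
    print(f"{fps:.1f} steps/s")
```

With a real environment, the callable could be something like `lambda: env.step(env.action_space.noop())`, using the same calls as the loop above. This ignores episode termination, so it is only suitable for a short measurement window.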

First gym.make() stucked in downloading MixinGradle-dcfaf61.jar

Over 6 years ago

I’m so sorry @everyone, I didn’t realize how often people wouldn’t have access to an internet connection.

Once I fix some more of the pressing race conditions and make documentation on how to a) dockerize and b) run headless/over SSH, I’ll work on this.

We’re so close to having a decent Minecraft RL environment!

Sample efficiency and human dataset

Over 6 years ago

In this competition we are talking about sampling from the environment; you can use as many of the human samples as many times as you want.

The motivation is this: it is far more feasible to collect data from a bunch of humans driving cars than to run ε-greedy random exploration for some RL algorithm with a real car. Hence we limit the number of samples you get with the real car to 8,000,000, and encourage you to use the dataset as much as possible.

Hope this helps!
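One way to keep track of the environment-sample limit during training is to wrap the environment and count calls to `step()`. The sketch below is an assumption about how one might do this, not the competition's own accounting: `SampleBudget`, `SampleBudgetExceeded`, and `ToyEnv` are hypothetical names, and `ToyEnv` is a stand-in so the example runs without MineRL.

```python
class SampleBudgetExceeded(RuntimeError):
    """Raised when the environment-sample budget has been used up."""


class SampleBudget:
    """Wrap an env-like object and count step() calls against a budget."""

    def __init__(self, env, max_samples=8_000_000):
        self.env = env
        self.max_samples = max_samples
        self.used = 0

    @property
    def remaining(self):
        return self.max_samples - self.used

    def reset(self):
        # Assumption: resets are passed through and do not consume a sample.
        return self.env.reset()

    def step(self, action):
        if self.used >= self.max_samples:
            raise SampleBudgetExceeded(
                f"budget of {self.max_samples} environment samples exhausted"
            )
        self.used += 1
        return self.env.step(action)


class ToyEnv:
    """Hypothetical stand-in environment with a Gym-like interface."""

    def reset(self):
        return 0

    def step(self, action):
        # (observation, reward, done, info)
        return 0, 0.0, False, {}


if __name__ == "__main__":
    env = SampleBudget(ToyEnv(), max_samples=3)
    env.reset()
    for _ in range(3):
        env.step(None)
    print(env.used, env.remaining)
```

Samples drawn from the human dataset never pass through `step()`, so they are not counted, which matches the distinction made above.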

[Announcement] MineRL 0.2.3 Released!

Over 6 years ago

MineRL 0.2.3 released (update now)!


We’re back at it again with another massive update to minerl; say hello to seeding!

The package is now on minerl-0.2.3, and we have released a new version of To upgrade:

pip3 install --upgrade minerl

Changes in minerl-0.2.3:

- Adding environment seeding! You can now use `env.seed(3858)` before `env.reset()` to set the environment seed. Closes #14.

- Breaking change! `env.reset()` now only returns a single object `obs` and not `obs, info` so as to conform with the OpenAI Gym standard.

- Fixed an issue where Navigate target would sometimes be underneath the ocean. Closes #162.

- Fixed an issue where a blacklisted file showed up in the data!

- Disabled the GUI during episodes of MineRL!

- Fixed an incompatibility with python3.5 where dictionaries would be out of order!

- Added a feature which allows the base port to be configured.

- Added a feature to set the maximum number of instances allowed for the competition evaluation phase.

- Removed a buggy download continuation feature. Closes #142.

- Added documentation and a fix for a multiprocessing `freeze_support` bug on Windows. Closes #145.
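Code written against earlier releases may still unpack two values from `env.reset()`. A small compatibility shim can accept either return shape and apply the seed first. This is a minimal sketch under stated assumptions: `reset_obs`, `OldStyleEnv`, and `NewStyleEnv` are hypothetical names, the stub environments stand in for MineRL, and the tuple check assumes observations are never themselves 2-tuples (MineRL observations are dictionaries).

```python
def reset_obs(env, seed=None):
    """Optionally seed env, reset it, and return only the observation."""
    if seed is not None:
        # Per the changelog, seed() is called before reset().
        env.seed(seed)
    result = env.reset()
    # Older behaviour: (obs, info). Newer behaviour: obs alone.
    if isinstance(result, tuple) and len(result) == 2:
        return result[0]
    return result


class OldStyleEnv:
    """Hypothetical stub whose reset() returns (obs, info)."""

    def __init__(self):
        self._seed = None

    def seed(self, value):
        self._seed = value

    def reset(self):
        return {"seed": self._seed}, {}


class NewStyleEnv(OldStyleEnv):
    """Hypothetical stub whose reset() returns obs only."""

    def reset(self):
        return {"seed": self._seed}


if __name__ == "__main__":
    print(reset_obs(OldStyleEnv(), seed=3858))
    print(reset_obs(NewStyleEnv(), seed=3858))
```

Both calls yield the same observation, so the rest of a training loop does not need to know which version of the package is installed.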

It is taking very long to launch first envoirenment

Over 6 years ago

Hi, I’m currently investigating. Could you make a GitHub issue?

Env.step() slowly with xvfb-run

Over 6 years ago

@haozheng, I don’t think it has anything to do with xvfb; it’s probably the speed of the train loop on DQN, as you had mentioned, rather than an interaction between the two. It takes a bit of time to feed-forward/backprop a net etc.

Env.step() slowly with xvfb-run

Over 6 years ago

An FPS of 6 seems really slow. Could you tell me about the hardware of your Linux machine?

It is taking very long to launch first envoirenment

Over 6 years ago

Hey, I’d just like to follow up here.

Cannot make env successfully every time

Over 6 years ago

Hi there, I believe we have fixed this! This is an issue on Windows where, if you are running multiple processes, it sometimes won’t fork.

If you experience this bug again, you can read more here on how to fix it:

[Announcement] MineRL 0.2.0 + Baselines! UPDATE FOR FIXED DATA 💯

Over 6 years ago

@BrandonHoughton Any progress on this?

[Announcement] MineRL 0.3.4 Released!

Over 5 years ago

@everyone

MineRL 0.3.4 has been released!


We’re working hard to get the finishing touches for the competition ready and are so excited to bring you a new version of MineRL.

In this update we’ve added `minerl.interactor`, a new tool enabling humans to interact directly with agents during an episode! (See video below.)

You can find a tutorial in the docs here (https://minerl.io/docs/tutorials/minerl_tools.html#interactive-mode-minerl-interactor).

Get the new package with

pip3 install --upgrade minerl

Major Updates:

- `minerl.viewer` enabling human AI interaction!