LifeCLEF 2018 Bird - Monophone

Bird sound recognition from monodirectional recordings

Note: This challenge is one of the two subtasks of the LifeCLEF Bird identification challenge 2018. For more information about the other subtask, click here. Both challenges share the same training dataset.

Challenge description

The goal of the task is to identify the species of the most audible bird (i.e. the one that was intended to be recorded) in each of the provided test recordings. The evaluated systems therefore have to return a ranked list of possible species for each of the 12,347 test recordings. Each prediction item (i.e. each line of the file to be submitted) has to respect the following format: <MediaId;ClassId;Probability;Rank>

Here is a short fake run example that respects this format and covers only 3 test MediaIds: monophone_fake_run
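As an illustration of the line format above, the following minimal sketch turns per-recording species probabilities into ranked submission lines. It is not an official tool: the function names `format_run_lines` and `write_run` are hypothetical, and the cap of `top_k=100` entries per recording, the six-decimal probability formatting, and the absence of a header line are assumptions, since the page does not specify them.

```python
from typing import Dict, List


def format_run_lines(media_id: str, class_probs: Dict[str, float], top_k: int = 100) -> List[str]:
    """Turn one recording's species probabilities into <MediaId;ClassId;Probability;Rank> lines.

    Species are sorted by decreasing probability and ranked from 1.
    top_k is an assumed cap on the list length (not specified by the organizers).
    """
    ranked = sorted(class_probs.items(), key=lambda item: item[1], reverse=True)[:top_k]
    return [
        f"{media_id};{class_id};{prob:.6f};{rank}"
        for rank, (class_id, prob) in enumerate(ranked, start=1)
    ]


def write_run(path: str, predictions: Dict[str, Dict[str, float]], top_k: int = 100) -> None:
    """Write one line per (recording, species) pair; no header line is written (assumption)."""
    with open(path, "w", encoding="utf-8") as handle:
        for media_id, class_probs in predictions.items():
            for line in format_run_lines(media_id, class_probs, top_k):
                handle.write(line + "\n")


if __name__ == "__main__":
    # Hypothetical MediaId and ClassId values, for illustration only.
    for line in format_run_lines("1234", {"speciesA": 0.7, "speciesB": 0.2, "speciesC": 0.1}):
        print(line)
```

Run as a script, this prints `1234;speciesA;0.700000;1`, `1234;speciesB;0.200000;2` and `1234;speciesC;0.100000;3`. Sorting by probability before assigning ranks keeps the Probability and Rank columns consistent with each other.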

Each participating group is allowed to submit up to 4 runs built from different methods. Semi-supervised, interactive or crowdsourced approaches are allowed but will be compared separately from fully automatic methods. Any human assistance in the processing of the test queries must therefore be signaled in the submitted runs.

Participants are allowed to use any of the provided metadata complementary to the audio content (.wav files sampled at 44.1 kHz, 48 kHz or 96 kHz), and may also use any external training data on the condition that (i) the experiment is entirely reproducible, i.e. the external resource used is clearly referenced and accessible to any other research group in the world, (ii) participants submit at least one run without external training data so that the contribution of such resources can be studied, and (iii) the additional resource does not contain any of the test observations. In particular, it is strictly forbidden to crawl training data from www.xeno-canto.org.

Data

The data collection is the same as the one used in BirdCLEF 2017 and is mostly based on contributions from the Xeno-Canto network. The training set contains 36,496 recordings covering 1,500 species of Central and South America (the largest bioacoustic dataset in the literature). It has a massive class imbalance, with a minimum of four recordings for Laniocera rufescens and a maximum of 160 recordings for Henicorhina leucophrys. Recordings are associated with various metadata such as the type of sound (call, song, alarm, flight, etc.), the date, the location, textual comments of the authors, multilingual common names and collaborative quality ratings. The test set contains 12,347 recordings of the same type (monophone recordings). More details about the data can be found in the overview working note of BirdCLEF 2017.
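The class imbalance described above can be checked by counting recordings per species. The sketch below assumes the training labels have already been extracted from the metadata into a flat sequence of ClassId strings, one per recording; `summarize_imbalance` and `ImbalanceSummary` are hypothetical names, not part of any provided tooling.

```python
from collections import Counter
from typing import Iterable, NamedTuple, Tuple


class ImbalanceSummary(NamedTuple):
    num_recordings: int
    num_classes: int
    rarest: Tuple[str, int]       # (ClassId, recording count) of the least represented species
    most_common: Tuple[str, int]  # (ClassId, recording count) of the most represented species
    ratio: float                  # most_common count divided by rarest count


def summarize_imbalance(class_ids: Iterable[str]) -> ImbalanceSummary:
    """Count recordings per ClassId (hypothetical input: one label per training recording)."""
    counts = Counter(class_ids)
    if not counts:
        raise ValueError("expected at least one label")
    rarest = min(counts.items(), key=lambda item: item[1])
    most_common = counts.most_common(1)[0]
    return ImbalanceSummary(
        num_recordings=sum(counts.values()),
        num_classes=len(counts),
        rarest=rarest,
        most_common=most_common,
        ratio=most_common[1] / rarest[1],
    )
```

With the figures quoted above (4 recordings for the rarest species, 160 for the most common one), `ratio` would come out at 160 / 4 = 40.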

Submission instructions

As soon as the submission is open, you will find a “Create Submission” button on this page (just next to the tabs).

More information will be available soon.

Evaluation criteria

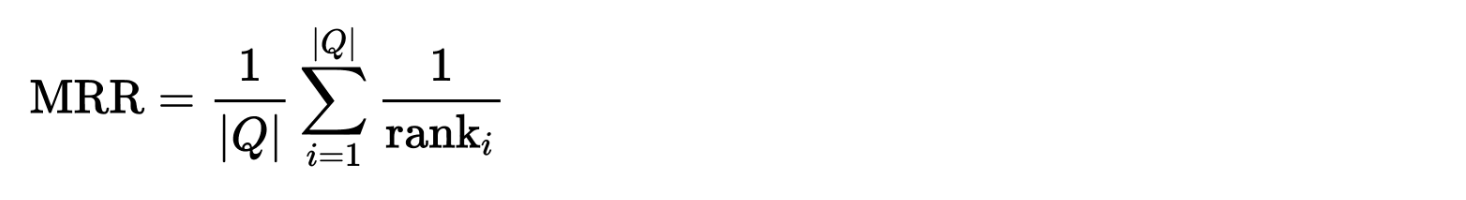

The evaluation metric is the Mean Reciprocal Rank (MRR). The MRR is a statistical measure for evaluating any process that produces a list of possible responses to a sample of queries, ordered by probability of correctness. The reciprocal rank of a query response is the multiplicative inverse of the rank of the first correct answer. The MRR is the average of the reciprocal ranks over the whole test set:

$$\mathrm{MRR} = \frac{1}{|Q|} \sum_{i=1}^{|Q|} \frac{1}{\mathrm{rank}_i}$$

where $|Q|$ is the total number of query occurrences in the test set and $\mathrm{rank}_i$ is the rank of the first correct answer for the $i$-th query.
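The MRR formula can be sketched in Python as follows. This is a minimal illustration, not the official evaluation script. It assumes that a run has been grouped into a dictionary mapping each MediaId to its ClassIds ordered by Rank, that the ground truth holds exactly one ClassId per MediaId, and that a query whose correct species is missing from the returned list contributes a reciprocal rank of 0 (a common convention, assumed here).

```python
from typing import Dict, Sequence


def reciprocal_rank(ranked_class_ids: Sequence[str], true_class_id: str) -> float:
    """Return 1 / rank of the first correct answer, or 0.0 if it is absent (assumed convention)."""
    for rank, class_id in enumerate(ranked_class_ids, start=1):
        if class_id == true_class_id:
            return 1.0 / rank
    return 0.0


def mean_reciprocal_rank(run: Dict[str, Sequence[str]], ground_truth: Dict[str, str]) -> float:
    """Average the reciprocal ranks over all |Q| queries listed in ground_truth.

    A MediaId with no predictions in the run counts as 0 (assumption).
    """
    if not ground_truth:
        raise ValueError("ground truth is empty")
    total = sum(
        reciprocal_rank(run.get(media_id, ()), true_class)
        for media_id, true_class in ground_truth.items()
    )
    return total / len(ground_truth)
```

For example, if the correct species is ranked 1st for one recording, 3rd for a second and missing for a third, the MRR is (1 + 1/3 + 0) / 3 = 4/9 ≈ 0.444.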

Resources

Contact us

- Technical issues: https://gitter.im/crowdAI/lifeclef-2018-bird-monophone

- Discussion forum: https://www.crowdai.org/challenges/lifeclef-2018-bird-monophone/topics

We strongly encourage you to use the public channels mentioned above for communication between participants and organizers. In extreme cases, if you have queries or comments that you would like to send through a private channel, you can email us at:

- Sharada Prasanna Mohanty: sharada.mohanty@epfl.ch

- Hervé Glotin: glotin[AT]univ-tln[DOT]fr

- Hervé Goëau: herve[DOT]goeau[AT]cirad[DOT]fr

- Alexis Joly: alexis[DOT]joly[AT]inria[DOT]fr

- Ivan Eggel: ivan[DOT]eggel[AT]hevs[DOT]ch

More information

You can find additional information on the challenge here: http://imageclef.org/node/230

Baseline Repository

A baseline system and an accompanying tutorial can be found here: https://github.com/kahst/BirdCLEF-Baseline

We encourage all participants of the challenge to build upon the provided code base and share the results for future reference.

Results (tables and figures)

(Official round during the LifeCLEF 2018 campaign)

Prizes

LifeCLEF 2018 is an evaluation campaign organized as part of the CLEF initiative labs. The campaign offers several research tasks that welcome participation from teams around the world. The results of the campaign appear in the working notes proceedings, published by CEUR Workshop Proceedings (CEUR-WS.org). Selected contributions from the participants will be invited for publication the following year in the Springer Lecture Notes in Computer Science (LNCS), together with the annual lab overviews.

Datasets License
