NeurIPS 2019: Learn to Move - Walk Around

Reinforcement Learning on Musculoskeletal Models

Announcements

Best Performance Track (leaderboard):

Winner: PARL

- Score: 1490.073

- Video

- Test code

Second Place: scitator

- Score: 1346.939

- Video

Third Place: SimBodyWithDummyPlug

- Score: 1303.727

- Video

Machine Learning Track:

The best and finalist papers are accepted to the NeurIPS 2019 Deep Reinforcement Learning Workshop

Best Paper:

- Efficient and robust reinforcement learning with uncertainty-based value expansion, [PARL] Bo Zhou, Hongsheng Zeng, Fan Wang, Yunxiang Li, and Hao Tian

Finalists:

- Distributed Soft Actor-Critic with Multivariate Reward Representation and Knowledge Distillation, [SimBodyWithDummyPlug] Dmitry Akimov

- Sample Efficient Ensemble Learning with Catalyst.RL, [scitator] Sergey Kolesnikov and Valentin Khrulkov

Reviewers who provided thoughtful feedback:

- Yunfei Bai (Google X), Glen Berseth (UC Berkeley), Nuttapong Chentanez (NVIDIA), Sehoon Ha (Google Brain), Jemin Hwangbo (ETH Zurich), Seunghwan Lee (Seoul National University), Libin Liu (DeepMotion), Josh Merel (DeepMind), Jie Tan (Google)

Review board members who selected the papers:

- Xue Bin (Jason) Peng (UC Berkeley), Seungmoon Song (Stanford University), Łukasz Kidziński (Stanford University), Sergey Levine (UC Berkeley)

Neuromechanics Track:

No winner was selected for the neuromechanics track.

Notification:

- OCT 26: Timeline is adjusted

- Paper submission due date: November 3

- Winners announcement: November 29

- Time zone: Anywhere on Earth

- OCT 18: Submissions for Round 2 are open!

- Only Docker submissions are accepted

- Evaluation will be done with osim-rl v3.0.11 (updated from v3.0.9)

- All submissions will be re-evaluated with the latest version

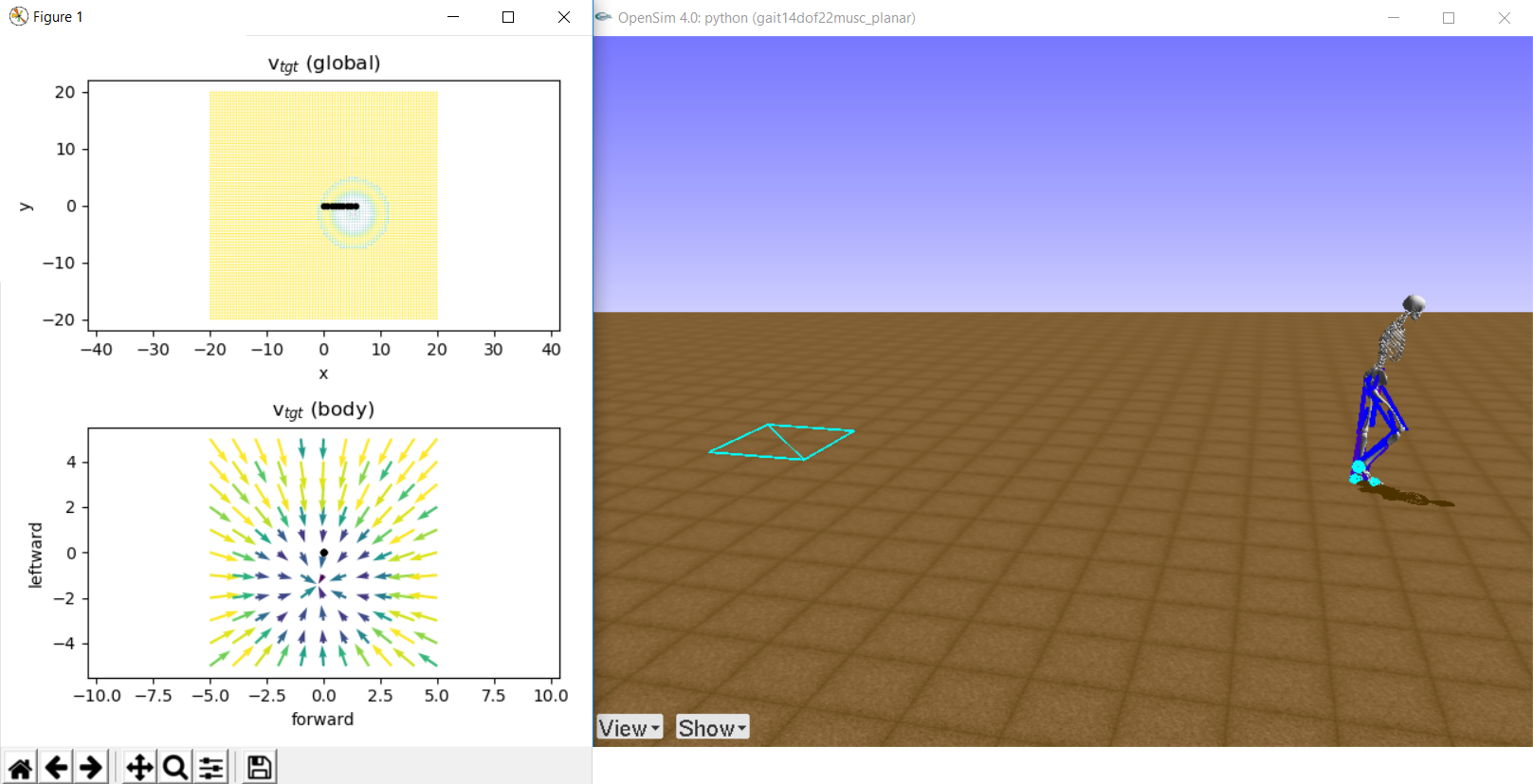

- OCT 6: Reward function for Round 2

- Code

- Target velocity test code

- Documentation

- Forum

- AUG 5: New submission option available

- AUG 5: Example code of training an arm model

- AUG 5: Google Cloud Credits to first 200 teams

- JUL 17: Evaluation environment for Round 1 is set to difficulty=2, model='3D', project=True, and obs_as_dict=True

- JUL 17: Competition Tracks and Prizes

Simulation update:

- OCT 20: [v3.0.11] Round 2: difficulty=3, model='3D', project=True, obs_as_dict=True

- OCT 18: [v3.0.9] Round 2

- AUG 9: [v3.0.6] success reward fixed

- AUG 5: [v3.0.5] observation_space updated

- JUL 27: observation_space updated

- JUN 28: observation_space defined

- JUN 15: action space reordered
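The Round 1 and Round 2 evaluation settings are spread across several dated updates above. As a minimal sketch, they can be collected in one place and compared against a local configuration before submitting. The helper names (`EvalSettings`, `settings_for_round`, `mismatches`) are hypothetical and not part of osim-rl; the field names simply mirror the keywords quoted in the announcements, and how they map onto osim-rl's environment and reset calls is an assumption to check against the osim-rl documentation.

```python
from dataclasses import dataclass, asdict
from typing import Optional


@dataclass(frozen=True)
class EvalSettings:
    # Hypothetical record of the announced evaluation settings.
    # Field names copy the keywords quoted on this page; their exact
    # mapping onto osim-rl calls is an assumption, not a documented API.
    difficulty: int
    model: str
    project: bool
    obs_as_dict: bool
    osim_rl_version: Optional[str] = None  # None: no version pinned on the page


# Values as listed in the "Notification" and "Simulation update" sections.
ROUND_SETTINGS = {
    1: EvalSettings(difficulty=2, model="3D", project=True, obs_as_dict=True),
    2: EvalSettings(difficulty=3, model="3D", project=True, obs_as_dict=True,
                    osim_rl_version="3.0.11"),
}


def settings_for_round(round_number: int) -> dict:
    """Return the announced settings for a round as a plain dict."""
    if round_number not in ROUND_SETTINGS:
        raise ValueError(f"no announced settings for round {round_number}")
    return asdict(ROUND_SETTINGS[round_number])


def mismatches(local: dict, round_number: int) -> dict:
    """Report keys where a local config differs from the announced settings.

    Returns {key: (local_value, announced_value)}. Announced values that are
    None (not pinned on the page) are skipped; an empty dict means a match.
    """
    announced = settings_for_round(round_number)
    return {
        key: (local.get(key), value)
        for key, value in announced.items()
        if value is not None and local.get(key) != value
    }
```

For example, a local setup still configured for Round 1 (`{"difficulty": 2, "model": "3D", "project": True, "obs_as_dict": True}`) passes the Round 1 check with an empty result, while `mismatches(local, 2)` flags `difficulty` as `(2, 3)` and the unrecorded osim-rl version as `(None, "3.0.11")`.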

Learn to Move - Walk Around

Welcome to the Learn to Move: Walk Around challenge, one of the official challenges in the NeurIPS 2019 Competition Track. Your task is to develop a controller for a physiologically plausible 3D human model that walks or runs following velocity commands with minimum effort. You are provided with a human musculoskeletal model and a physics-based simulation environment, OpenSim. There are three tracks: 1) Best performance, 2) Novel ML solution, and 3) Novel biomechanical solution, and the winner of each track will receive an award.

Get Started

- Download code at the osim-rl github repo.

- Find details on the task and environment at the osim-rl project page: L2M2019 - Environment.

- Submit your solution

- Option 1: submit solution in docker container

- Option 2: run controller on server environment

Competition Tracks and Prizes

- Best Performance Track

- Rank in the top 50 of Round 1

- Get the highest score in Round 2

- Prizes for the winner:

- NVIDIA GPU

- Travel grant (up to $1,000 to NeurIPS 2019 or Stanford)

- ML (Machine Learning) Track (find more details here)

- Rank in the top 50 of Round 1

- Submit a paper describing your machine learning approach in NeurIPS format

- We will select the best paper

- Prizes for the winner:

- 2x NVIDIA GPU

- Travel grant (up to $1,000 to NeurIPS 2019 or Stanford)

- Paper acceptance into the NeurIPS Deep RL workshop

- NM (Neuromechanics) Track (find more details here)

- Rank in the top 50 of Round 1

- Submit a paper describing your novel neuromechanical findings/approach in Journal of NeuroEngineering and Rehabilitation format

- We will select the best paper

- Prizes for the winner:

- Xsens MVN Awinda & MVN Analyze software (1 year license)

- Travel grant (up to $1,000 to NeurIPS 2019 or Stanford)

- Paper acceptance (upon revision) to the Journal of NeuroEngineering and Rehabilitation with no publication fee

- Other support

- Top 50 of Round 1: $500 Google Cloud credit

- First 200 submissions: $200 Google Cloud credit

- Credits will be sent out to the new teams every weekend

Timeline

- Round 1: June 6 ~ October 13, 2019

- Get in top 50 to proceed to Round 2

- Evaluation will be done with model=3D and difficulty=2

- Round 2: October 14 ~ 27, 2019

- See notification at the top of the page

- Paper submission for Tracks 2 and 3: due November 3, 2019 (adjusted from October 14 ~ 27)

- Winners announcement: November 29, 2019 (adjusted from November 22)

Resources

- osim-rl project page

- Additional tools:

- Walking controller 1, a physiologically plausible simple control model

- Human gait data

- Want to collaborate with experts in different fields? Find teammates on our forum

- Chat live with other participants on Gitter

More Info

Contact Us

- Seungmoon Song, smsong@stanford.edu

- Łukasz Kidziński, lukasz.kidzinski@stanford.edu

Leaderboard

| Rank | Team | Score |
|---|---|---|
| 01 | PARL | 1490.073 |
| 02 | scitator | 1346.939 |
| 03 | SimBodyWithDummyPlug | 1303.727 |
| 04 | | 285.268 |
| 05 | | 238.993 |