NIPS 2018 : Adversarial Vision Challenge (Untargeted Attack Track)

Welcome to the Adversarial Vision Challenge, one of the official challenges in the NIPS 2018 competition track. In this competition you can take on the role of an attacker or a defender (or both). As a defender you are trying to build a visual object classifier that is as robust to image perturbations as possible. As an attacker, your task is to find the smallest possible image perturbations that will fool a classifier.

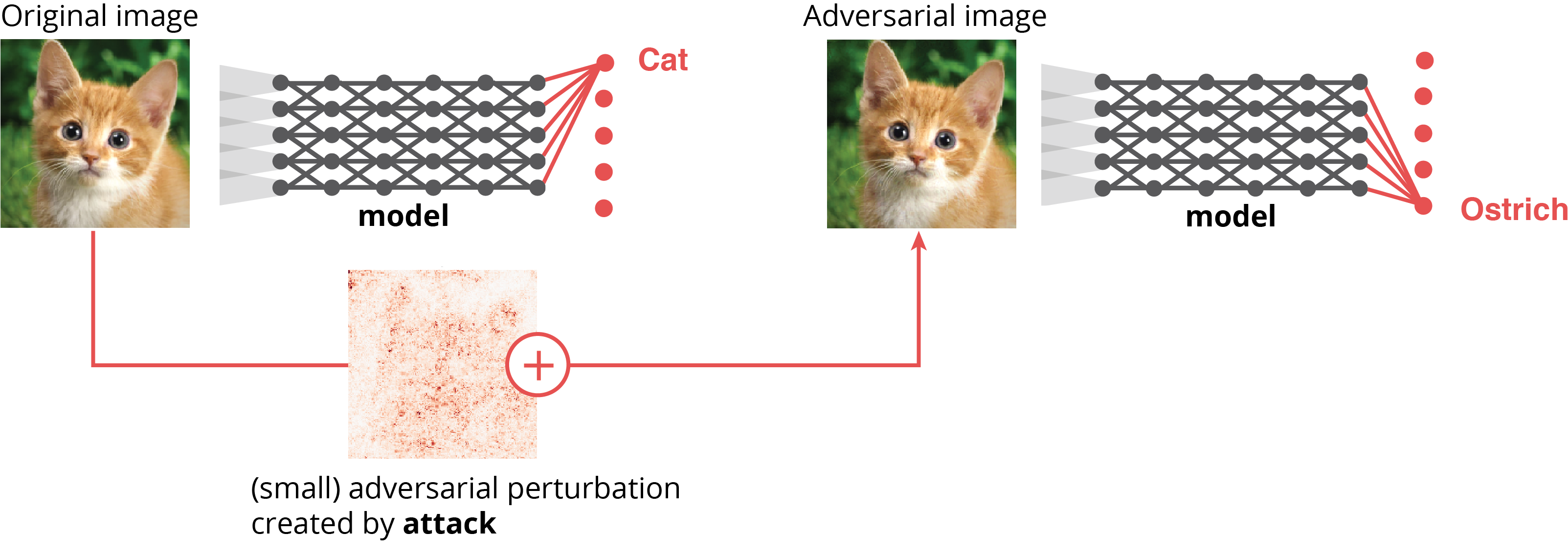

The overall goal of this challenge is to facilitate measurable progress towards robust machine vision models and more generally applicable adversarial attacks. As of right now, modern machine vision algorithms are extremely susceptible to small and almost imperceptible perturbations of their inputs (so-called adversarial examples). This property reveals an astonishing difference in the information processing of humans and machines and raises security concerns for many deployed machine vision systems like autonomous cars. Improving the robustness of vision algorithms is thus important to close the gap between human and machine perception and to enable safety-critical applications.

Competition tracks

There are three tracks in which you and your team can compete: defense, untargeted attack, and targeted attack. This page describes the untargeted attack track.

In this track you build an attack algorithm that breaks the defenses. For each model and each given image, your attack tries to find the smallest perturbation that makes the model predict a wrong class label (a so-called adversarial perturbation). Your attack can craft model-specific adversarials by asking the model for its prediction on self-defined inputs (up to 1000 times per image). The smaller the adversarial perturbations your attack finds (on average), the better your score (see the Evaluation criterion section for how attacks are scored).
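As a rough illustration of what a query-limited, label-only attack can look like, the sketch below binary-searches the straight line between the original image and a starting point that is already misclassified. It is not the organizers' reference attack. The `BudgetedModel` wrapper, the `blend_attack` function, the toy classifier, and the [0, 255] pixel range are all assumptions made for this example; only the 1000-query budget comes from the track description.

```python
import numpy as np

MAX_QUERIES = 1000  # per-image query budget stated in the track description


class BudgetedModel:
    """Hypothetical wrapper: label-only access to a model with a query cap."""

    def __init__(self, predict, budget=MAX_QUERIES):
        self.predict = predict
        self.budget = budget
        self.used = 0

    def __call__(self, image):
        if self.used >= self.budget:
            raise RuntimeError("query budget exhausted")
        self.used += 1
        return self.predict(image)


def blend_attack(model, image, label, start, steps=20):
    """Binary-search the line from `image` to `start` for a nearby adversarial.

    `start` must already be misclassified; returns None otherwise.
    Uses 1 + `steps` queries.
    """
    if model(start) == label:
        return None
    lo, hi = 0.0, 1.0  # hi always marks a blend verified as adversarial
    for _ in range(steps):
        mid = (lo + hi) / 2.0
        candidate = (1.0 - mid) * image + mid * start
        if model(candidate) != label:
            hi = mid  # still misclassified: move closer to the original
        else:
            lo = mid  # correctly classified again: back off
    return (1.0 - hi) * image + hi * start


# Toy stand-in for a classifier (assumption): class 1 if the image is bright.
def toy_predict(image):
    return int(image.mean() > 127.5)


image = np.full((8, 8, 3), 200.0)  # classified as 1
start = np.zeros((8, 8, 3))        # classified as 0, so already adversarial
model = BudgetedModel(toy_predict)
adversarial = blend_attack(model, image, label=1, start=start)
print("queries used:", model.used)
print("L2 distance:", np.linalg.norm(adversarial - image))
```

The search spends one query to check the starting point and one per bisection step, so it stays far below the budget. A competitive attack would use the remaining queries to shrink the perturbation further.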

Evaluation criterion

Attacks are scored as follows (lower is better):

- Let A be the attack and S be the set of samples.

- We apply attack A against the best five models for each sample in S.

- If an attack fails to produce an (untargeted) adversarial for a given sample, then we register a worst-case distance (the distance of the sample to a uniform grey image).

- The final attack score is the median L2 distance across samples.
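The scoring steps above can be sketched in a few lines of NumPy. This is a minimal illustration, not the official scoring code. It assumes pixels in [0, 255] (so uniform grey is 127.5), an unnormalized L2 distance, and that distances for all model and sample pairs are pooled before taking the median; the page does not specify any of these details. The function names `l2` and `attack_score` are hypothetical.

```python
import numpy as np

GREY_VALUE = 127.5  # assumption: pixels lie in [0, 255], grey is the midpoint


def l2(a, b):
    """Unnormalized L2 distance between two images of the same shape."""
    diff = np.asarray(a, dtype=np.float64) - np.asarray(b, dtype=np.float64)
    return float(np.linalg.norm(diff.ravel()))


def attack_score(samples, adversarials):
    """Median L2 distance of an attack (lower is better).

    samples:      list of original images.
    adversarials: one list per sample with one entry per model, each entry
                  either an adversarial image or None if the attack failed.
    """
    distances = []
    for original, per_model in zip(samples, adversarials):
        grey = np.full_like(original, GREY_VALUE, dtype=np.float64)
        worst_case = l2(original, grey)  # registered when the attack fails
        for adv in per_model:
            distances.append(worst_case if adv is None else l2(original, adv))
    return float(np.median(distances))


# Tiny usage example with two 2x2 samples and a single model.
samples = [np.zeros((2, 2)), np.full((2, 2), 255.0)]
adversarials = [[np.ones((2, 2))], [None]]  # second attack failed
score = attack_score(samples, adversarials)
print("attack score:", score)
```

Because the score is a median, a handful of failed samples with large worst-case distances matters less than it would under a mean, provided the attack succeeds on most samples.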

The top-5 models against which submissions are evaluated are fixed for two weeks at a time. After each two-week period, we evaluate all current submissions to determine the new top-5 models for the upcoming two weeks.

Submissions

To make a submission, please follow the instructions in this GitLab repository: https://gitlab.crowdai.org/adversarial-vision-challenge/nips18-avc-attack-template

Fork the above template repository in GitLab and follow the instructions in the README.md. You need a crowdAI account and must sign in to GitLab using this account. In the README you will also find links to multiple fully functional examples.

Timeline

(tentative).

* June 25th, 2018 : Challenge begins

* November 1st : Final submission date

* November 15th : Winners Announced

Organizing Team

The organizing team comes from multiple groups: University of Tübingen, Google Brain, EPFL and Pennsylvania State University.

The Team consists of:

* Wieland Brendel

* Jonas Rauber

* Alexey Kurakin

* Nicolas Papernot

* Behar Veliqi

* Sharada P. Mohanty

* Marcel Salathé

* Matthias Bethge

Contact Us

- Gitter Channel : crowdAI/nips-2018-adversarial-vision-challenge

- Discussion Forum : https://www.crowdai.org/challenges/nips-2018-adversarial-vision-challenge/topics

We strongly encourage you to use the public channels mentioned above for communications between the participants and the organizers. In extreme cases, if there are any queries or comments that you would like to make using a private communication channel, then you can send us an email at :

Prizes

- $15,000 worth of Paperspace cloud compute credits: the top-20 teams in each track (defense, untargeted attack, targeted attack) as of September 28th will receive $250 each.

Leaderboard

| Rank | Score |
| --- | --- |
| 01 | 1.600 |
| 02 | 1.639 |
| 03 | 2.124 |
| 04 | 2.224 |
| 05 | 2.293 |
